A leading Beauty Tech Brand partnered with listening247 to leverage AI-powered market intelligence and ad automation to guide brand positioning and 2026 campaign planning. This was achieved by gathering actionable consumer insights and campaign inspiration through monitoring global conversations about the brand, its competitors, and the broader wellness and beauty tech categories.

The Beauty Tech Brand wanted to understand how it was perceived globally compared to competitors and to identify common themes within the wellness and beauty tech space. They required a scalable solution to analyze more than 68,000 posts over six months from diverse sources including social media, blogs, forums, and news.

listening247 deployed its proprietary AI engine, DataVinci, to gather and analyze real-time conversations in English across multiple platforms (Facebook, YouTube, TikTok, Instagram, X, Reddit, blogs, forums, reviews, news articles). DataVinci tagged conversations by brand, theme, and sentiment to uncover drivers of consumer perception and competitive positioning.
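To make the tagging step concrete, here is a minimal sketch of tagging posts by brand, theme, and sentiment. It is not DataVinci's implementation, which is proprietary and not described here. The keyword lists, the `TaggedPost` type, and the function names are all hypothetical, and simple keyword matching stands in for whatever models a production engine would use.

```python
import re
from collections import Counter
from dataclasses import dataclass

# Hypothetical lexicons for illustration only; not DataVinci's actual rules.
BRANDS = {"brand_a": ["brand a"], "brand_b": ["brand b"]}
THEMES = {
    "wellness": ["sleep", "recovery", "wellness"],
    "beauty_tech": ["led mask", "device", "microcurrent"],
}
POSITIVE = {"love", "great", "amazing"}
NEGATIVE = {"hate", "broken", "disappointing"}


@dataclass
class TaggedPost:
    text: str
    brands: list[str]
    themes: list[str]
    sentiment: str


def match_labels(text: str, lexicon: dict[str, list[str]]) -> list[str]:
    """Return every label whose keywords appear as a substring of the text."""
    lowered = text.lower()
    return [
        label
        for label, keywords in lexicon.items()
        if any(keyword in lowered for keyword in keywords)
    ]


def score_sentiment(text: str) -> str:
    """Compare counts of distinct positive and negative words in the text."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    positive_hits = len(words & POSITIVE)
    negative_hits = len(words & NEGATIVE)
    if positive_hits > negative_hits:
        return "positive"
    if negative_hits > positive_hits:
        return "negative"
    return "neutral"


def tag_post(text: str) -> TaggedPost:
    """Attach brand, theme, and sentiment tags to one post."""
    return TaggedPost(
        text=text,
        brands=match_labels(text, BRANDS),
        themes=match_labels(text, THEMES),
        sentiment=score_sentiment(text),
    )


def sentiment_by_brand(posts: list[TaggedPost]) -> dict[str, Counter]:
    """Count sentiment labels per brand across all tagged posts."""
    summary: dict[str, Counter] = {}
    for post in posts:
        for brand in post.brands:
            summary.setdefault(brand, Counter())[post.sentiment] += 1
    return summary
```

Calling `tag_post` on each collected post and passing the results to `sentiment_by_brand` gives a per-brand sentiment breakdown, which is the kind of view that supports the competitive comparison described above.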

In this podcast, Tom Griffiths, Founder and CEO of Synergy CFO Solutions, interviews Michalis A. Michael, Co-founder and CEO of listening247. They explore how listening247 analyzes real online customer conversations and leverages AI to generate ad prompts and campaign ideas, helping small and mid-sized businesses break through the creative bottleneck.

Listen Now On: YouTube

P.S. Have questions about the podcast? Contact us at: info@listening247.com. We’re happy to help!

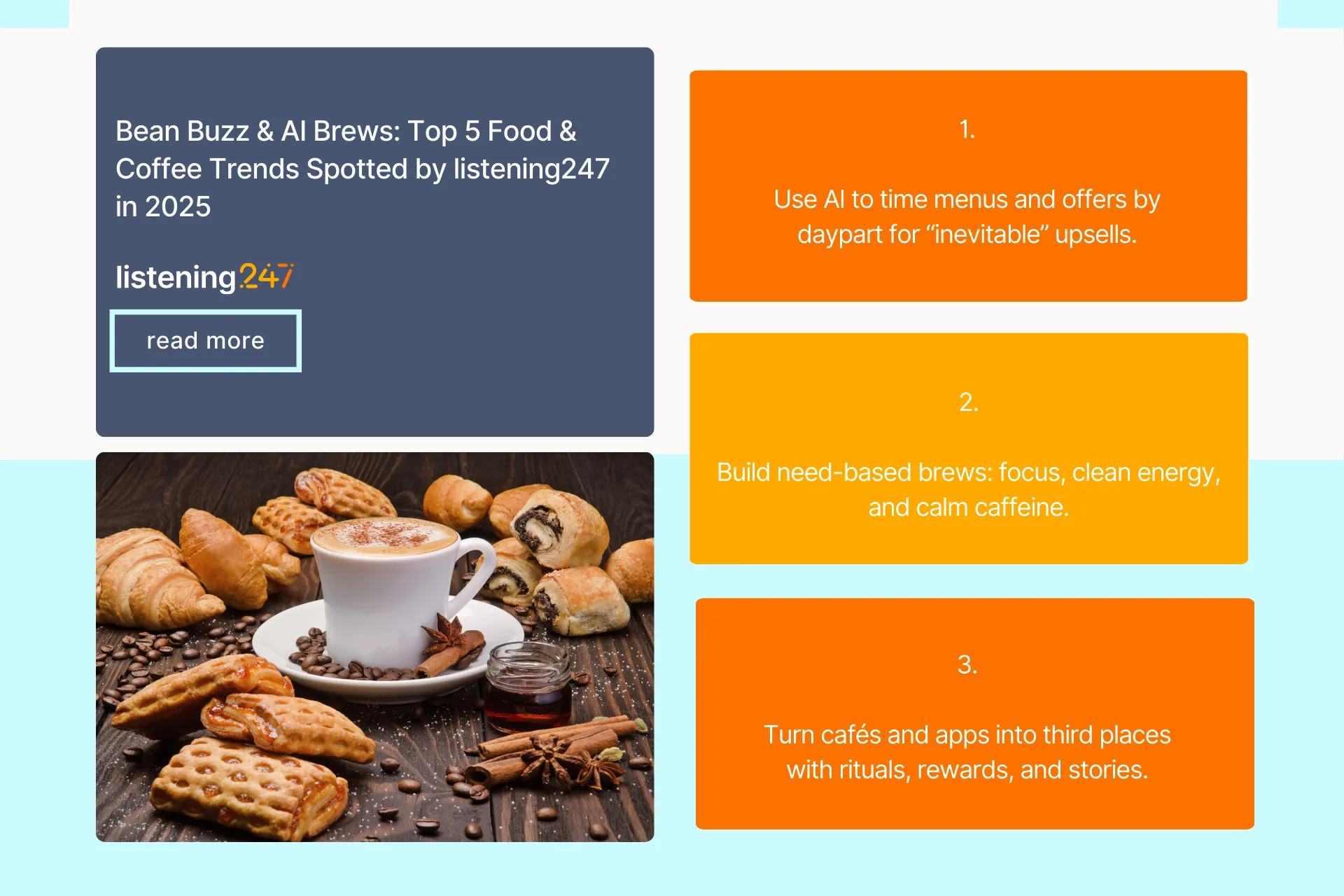

In food and coffee today, taste isn’t just on the tongue; it’s social, ritual, and smart.

From AI-curated menus to functional brews, these are the top 5 trends shaping how people sip, snack, and share in 2025 across the UK, EU, and US.

listening247’s analysis of 47,000+ conversations from the second half of 2025 across X, Instagram, TikTok, Reddit, forums, and blogs reveals five key topics driving category discussion in coffee and food service:

Product innovation leads, reflecting a rising demand for specialty coffee, clean-label ready-to-drink (RTD) beverages, and playful flavor mashups. Coffee chains (for example, Starbucks, Costa, Pret) and specialty roasters are also innovating by using AI to personalize menus, forecast demand by daypart, and refine data-led marketing.

Strategic takeaway: The better you know your customer, the easier it is to target them. Understanding micro moments (morning rush vs. afternoon slump), flavor tribes (nutty chocolate vs. bright citrus), and value signals (sustainability, wellness) turns broad audiences into highly targetable segments with higher conversion and loyalty.

Coffee chains and specialty roasters are adopting machine learning to:

Why it matters: precision targeting. When you know who buys what, when, and why, you tailor offers that feel inevitable, boosting attach rates and repeat orders.

Consumer talk around “focus,” “clean energy,” and “no jitters” is climbing across Reddit and TikTok. We see momentum for:

Strategic takeaway: segment by need (deep-work mornings, pre- or post-gym, gentler PM pick-me-ups) rather than by demographic alone.

The “best taste” is emotional and digital. Loyalty apps and order-ahead options are baseline; leaders differentiate with:

Strategic takeaway: map journeys by social channel and moment. For example: use TikTok to drive discovery with short-form content, then direct viewers to a free trial or introductory offer in your app. In-store, reinforce this with QR codes at the counter that capture feedback and unlock a discount, which you then use to tailor an ongoing subscription offer.

Coffee is culture; it anchors routines, identity, and “third place” belonging. A third place is a social space outside home (first place) and work (second place), where people feel comfortable, recognised, and part of a community.

Coffee spaces and rituals contribute to that sense of belonging through:

Strategic takeaway: lifestyle markers (remote work, fitness communities, eco-conscious shoppers) predict beverage and food attach rates better than age brackets.

High-impact promotions do more than discount offers; they layer in story, exclusivity, and participation so customers feel part of something, not just getting a lower price:

Strategic takeaway: AI amplifies what resonates, optimizing message, audience, and timing across X, Instagram, and TikTok. The result: casual sippers become community members.

Across 47,000+ conversations from the second half of 2025, the UK, EU, and US coffee and food landscape is thriving at the intersection of craft and code. Brands that deeply understand customers, by moment, mindset, and micro culture, can target with precision, personalize at scale, and build communities where the “best brew” is sensory, digital, and deeply human.

Michalis A. Michael, Founder & CEO of listening247 and author of the book “AI Powered Insights,” due out in early 2026, will chair the Market Research Society’s one‑day conference on 22 January 2026 and deliver the extended opening address. He will draw on themes from his book, sharing practical frameworks and case studies that show where AI adds real value to research and where human judgement must stay central, a key reason MRS invited him to lead the day. Expect a focused, evidence‑led programme shaped by that perspective.

Date: Thursday 22 January 2026

Venue: Radisson Blu Edwardian, Bloomsbury Street, London

Full programme & booking: MRS — AI: Powering‑Up Insights Conference 2026

Michalis A. Michael, Founder & CEO of listening247 and author of the upcoming book “AI Powered Insights,” will be a featured speaker at the Text Analytics Forum in Washington DC on 19 November 2025. He will share practical insights on how AI-driven text analytics is transforming business intelligence, highlighting real-world case studies and frameworks that balance AI innovation with human expertise. His session promises actionable takeaways for professionals navigating the evolving landscape of text analytics.

Date: Tuesday 19 November 2025

Venue: Washington Marriott Georgetown, Washington DC

Full programme & booking: Text Analytics Forum 2025

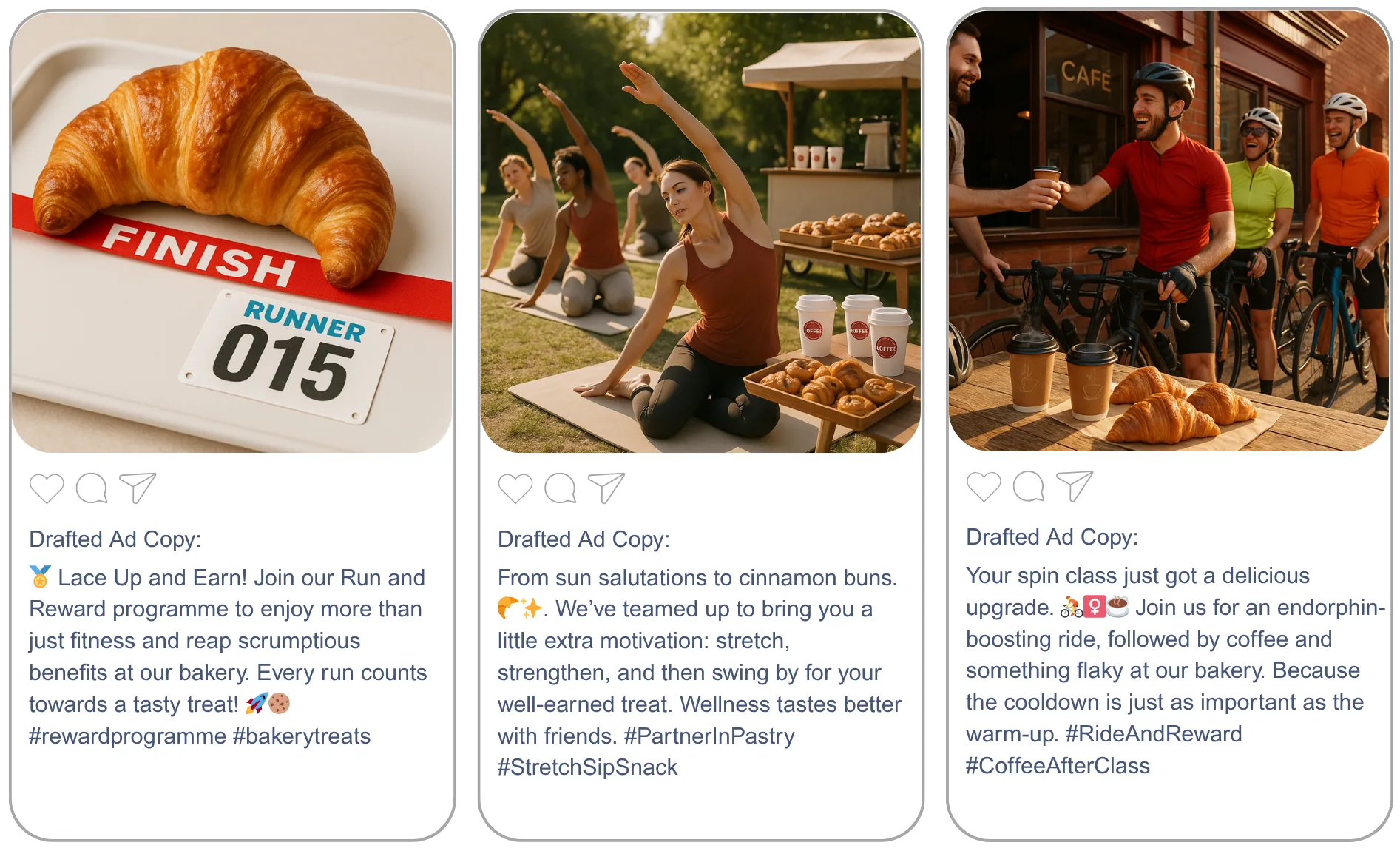

listening247 reveals key insights into how coffee and bakery brands monitor their social presence and drive smart marketing decisions by:

Using its proprietary Gen AI model, l247

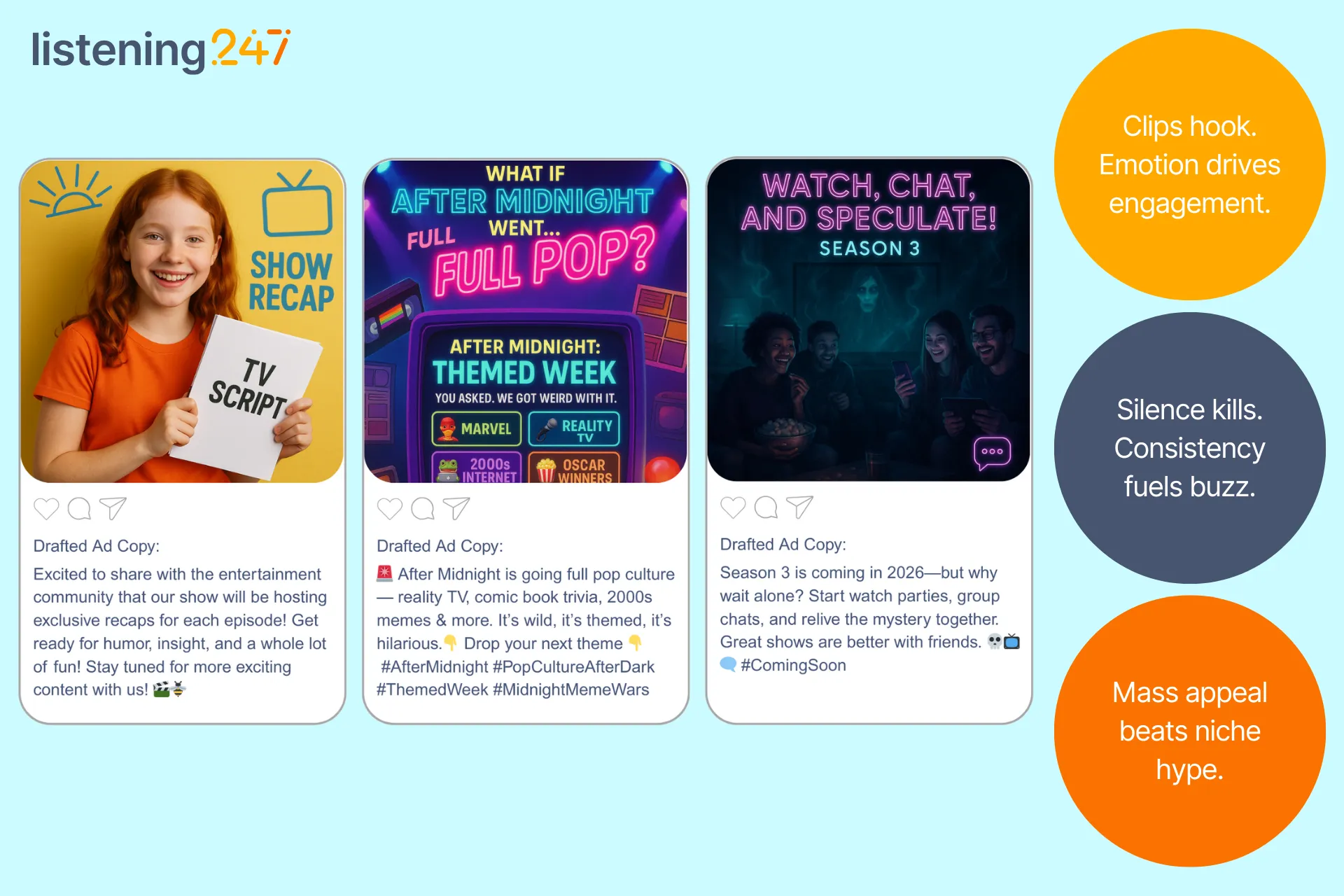

Based on identified conversations, L247 used its proprietary Gen AI model to create copy and imagery for ads:

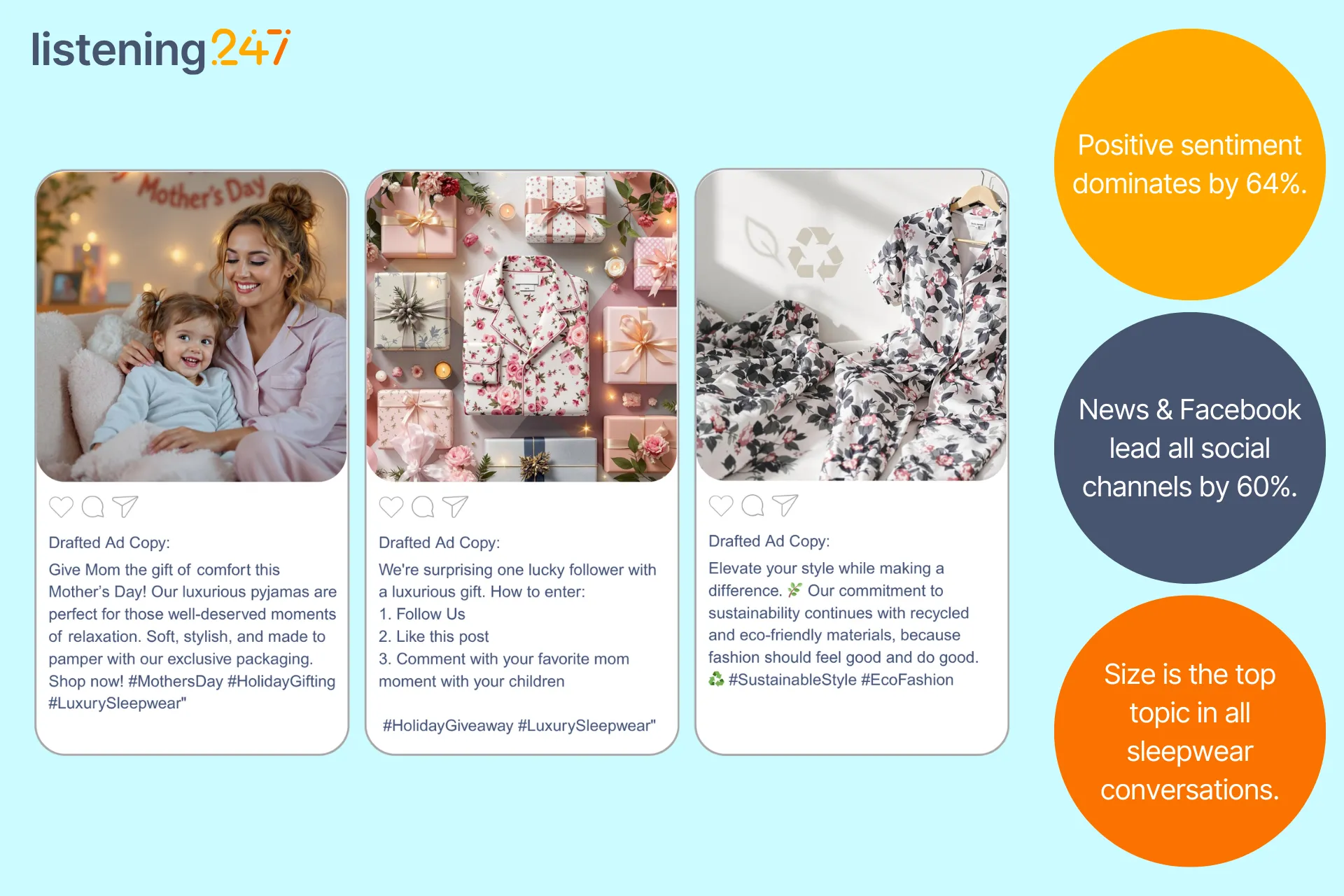

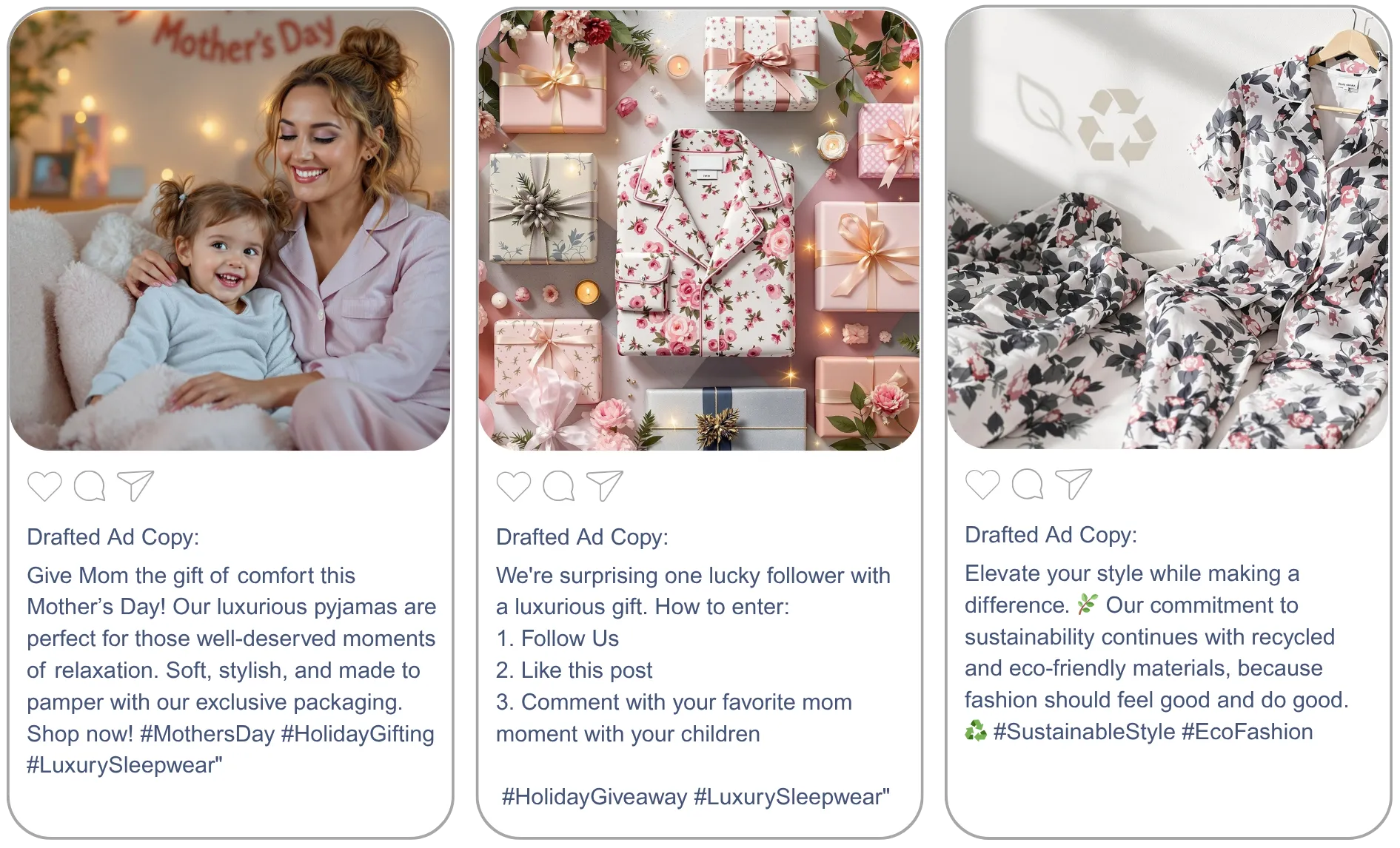

listening247 uncovered valuable insights about how brands in the sleepwear industry track their social presence and make informed marketing decisions by:

Using its proprietary Gen AI model, l247

Based on identified conversations, L247 used its proprietary Gen AI model to create copy and imagery for ads:

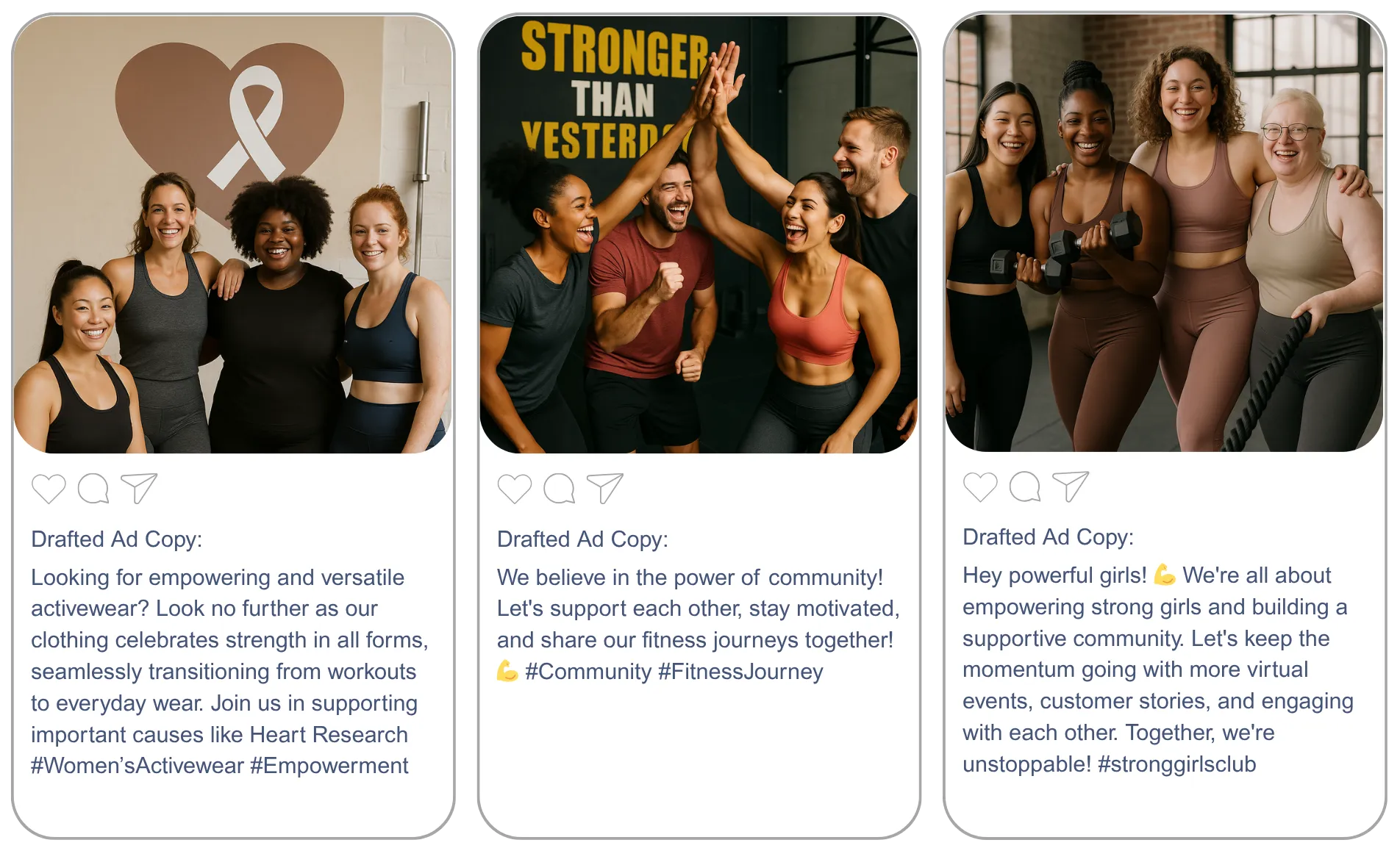

listening247 created insight reports for the women’s activewear industry to:

Using its proprietary Gen AI model, l247

Based on identified conversations, L247 used its proprietary Gen AI model to create copy and imagery for ads:

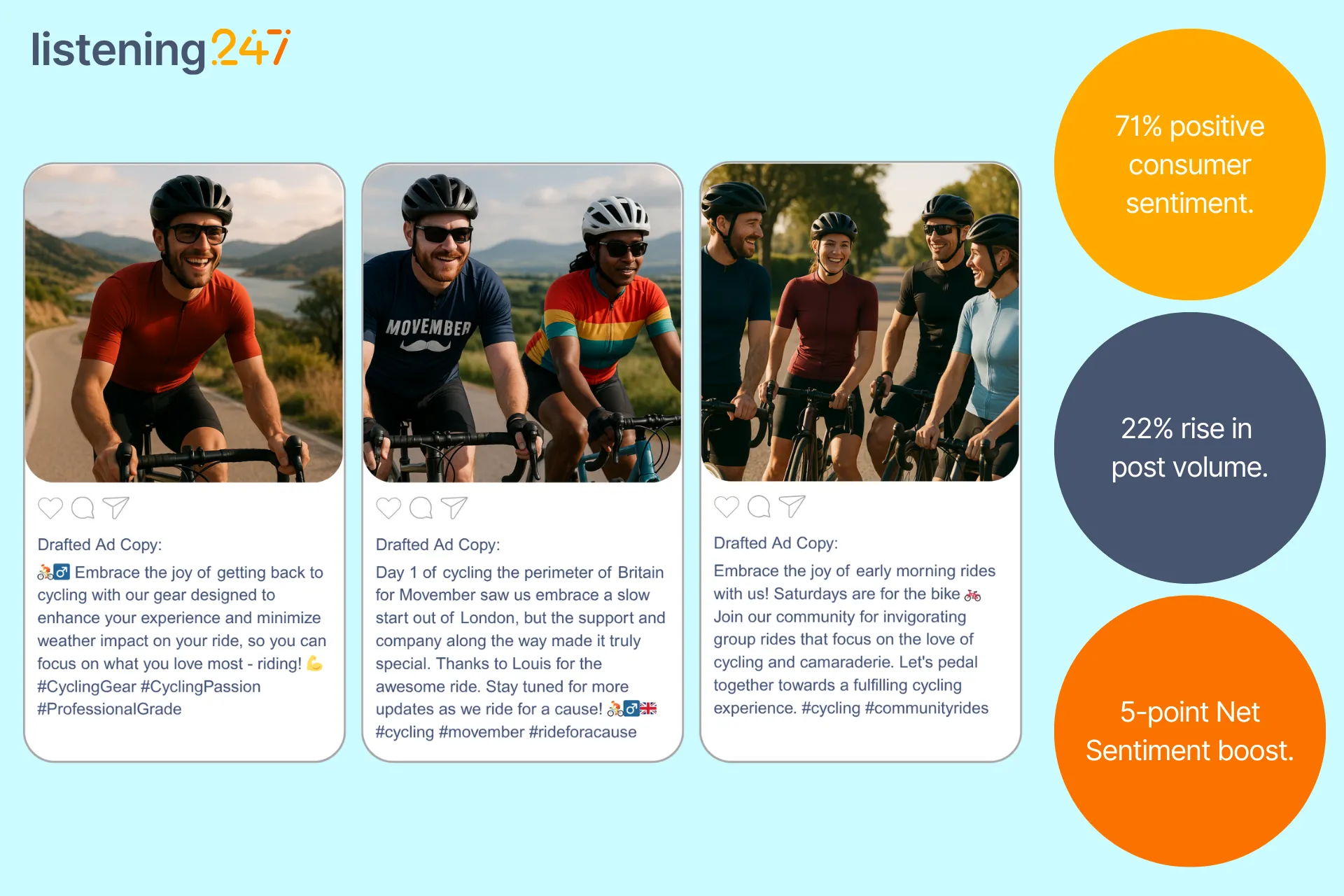

listening247 creates frequent insight reports for a cycling brand to:

Using its proprietary Gen AI model, l247

Based on identified conversations, L247 used its proprietary Gen AI model to create copy and imagery for ads:

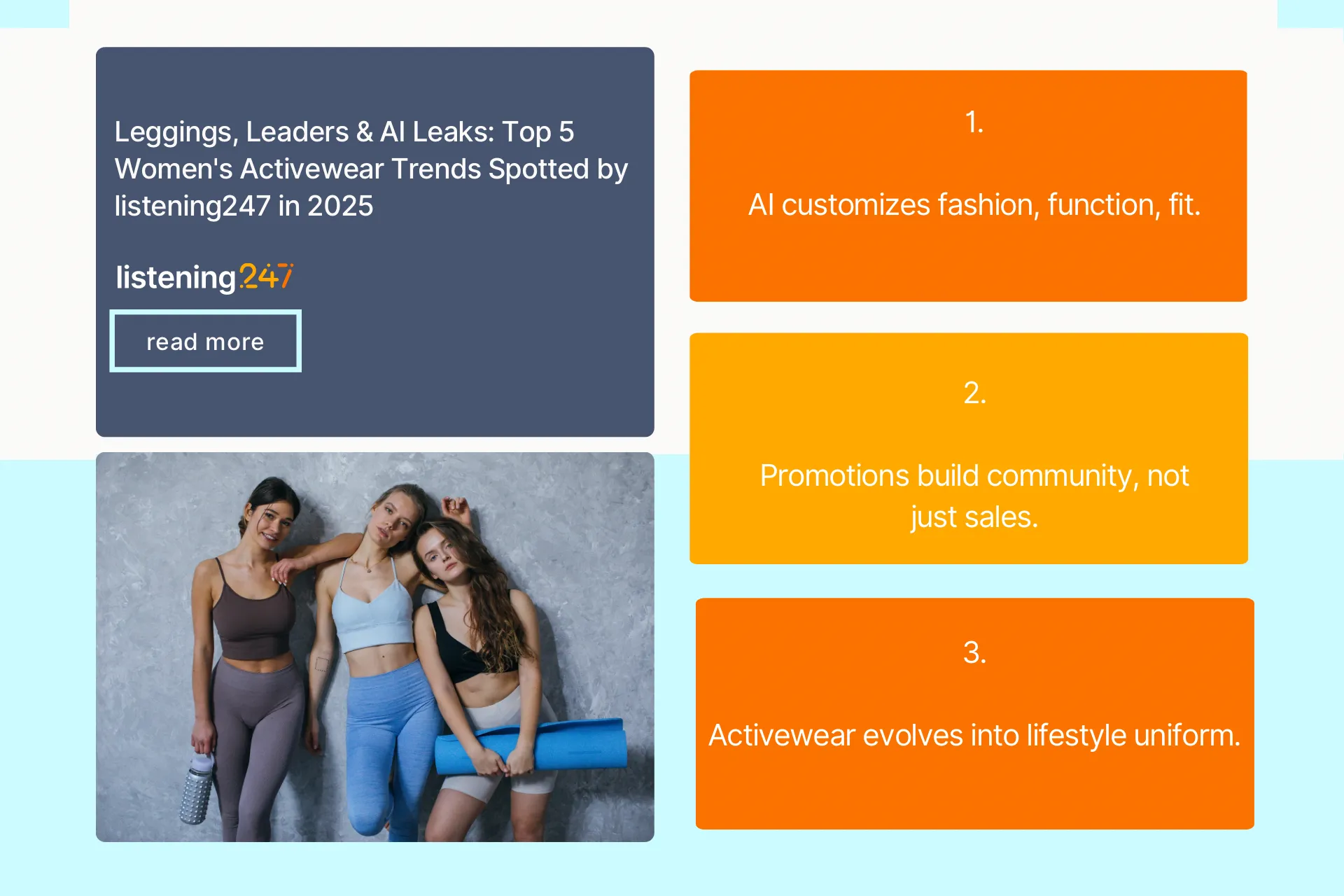

listening247’s analysis of 52,197+ conversations across Instagram, X, TikTok, YouTube, blogs, and forums reveals that in 2025, the women’s activewear industry is driven by five key topics:

Sports leads the discussion, reflecting the rise in women’s participation and their demand for performance-ready apparel. This opens the way for brands to use AI to transform the industry with personalized user designs, smarter shopping, and data-led marketing.

With the industry as a whole booming, consumers are embracing innovation, inclusivity, and the fusion of fashion and function; yet the presence of neutral sentiment signals an opportunity for brands to deepen engagement and build loyalty. The message is clear: consumers are invested, and the brands that stay ahead will be those that deliver comfort, tech, and meaningful connection.

The women’s activewear market is booming, set to skyrocket from $20.6B to $145.6B by 2032, driven by a surge in female participation in sports, growing health consciousness, and the rise of activewear as a lifestyle choice among millennials and Gen Z. Today’s consumers seek comfort, flexibility, and style tailored to their specific activities, whether running, yoga, or team sports, and brands are responding with tech-driven innovation.

AI is revolutionizing the game, enabling custom-fit designs through 3D body scanning, predictive movement analysis, and trend forecasting powered by machine learning. From adaptive fabrics to AI-generated collections, activewear is no longer just about function; it’s smart, stylish, and deeply personal.

Sources: (Fortune Business Insights); (The Brainy Insights); (TD Sportswear)

Activewear has evolved from a fashion trend into a full-blown lifestyle, with women leading the charge in blending comfort, style, and functionality into their everyday wardrobes. From the U.S. to China and Germany, global demand is being shaped by wellness, sustainability, and a desire for clothing that feels as good as it looks. Luxury fashion is also embracing the shift, with brands like Fendi and Versace launching athleisure lines.

Meanwhile, AI is accelerating this fusion of fashion and function by spotting trends before they go mainstream, enabling hyper-personalized shopping, and even supporting co-designed collections that mix traditional sportswear and luxury. In 2025, activewear isn’t just what women wear to work out; it’s what they live in.

Sources: (Mintel); (Heuritech)

Customer experience is now as vital as the clothing itself. Brands build loyalty by blending technology, personalisation, and emotional connection, offering innovations like AI-driven recommendations, virtual try-ons, and community-driven insights. Consumers expect seamless, tailored journeys where trust, quality, and authenticity are paramount.

Consequently, the "best fit" is increasingly digital and emotional, not just physical. Leading sportswear brands, such as Nike and Lululemon, demonstrate this by harnessing AI and machine learning to deliver product suggestions and apparel explicitly tailored to individual preferences and precise fit.

Sources: (StoryStream); (Retail Technology Innovation Hub)

Lifestyle is the real trendsetter. Women aren’t just buying sportswear for workouts. They’re choosing pieces that reflect who they are and how they live. The demand for versatile, stylish, and comfortable clothing has blurred the lines between gym gear and everyday fashion, fueling the rise of luxury athleisure.

Whether it’s leggings at brunch or hoodies on a shopping run, activewear is now a uniform for modern, wellness-driven living. Brands are also responding to the need for eco-friendly materials by using technology to engineer sustainable materials that are durable, lightweight, yet soft and breathable.

As one of the fastest-growing segments in fashion, women’s activewear thrives on smart, high-impact promotions. Brands must go beyond basic ads by leveraging social media challenges, influencer partnerships, referral programs, and user-generated content to build awareness and loyalty. Campaigns like 30-day workout challenges or fitness-themed giveaways not only engage communities but also turn customers into brand ambassadors.

AI and machine learning are taking this further, enabling targeted ads that resonate with a consumer’s specific fitness goals and lifestyles. In this space, the best promotions aren’t just selling clothes. They’re fueling motivation, community, and connection.

Sources: (Photoshelter); (Opensend); (Digital Defynd)

Through listening247’s analysis of over 52,000 online conversations, it’s clear that the women's activewear market is thriving as it evolves from pure sportswear into a wellness lifestyle uniform. Future success is defined by the integration of AI and technology, which enables brands to deliver hyper-personalized products, compelling community experiences, and customer journeys where the "best fit" is emotional, digital, and physical.

In peak season, the best gift isn’t always wrapped; it’s discovered through data. From nostalgia-driven gifting to AI-shaped shopping journeys, these are the seven holiday trends guiding how UK, EU, and US consumers plan, purchase, celebrate, and reset for the year ahead.

listening247’s analysis of 58,000+ public posts and conversations across X, Instagram, TikTok, Reddit, forums, and blogs, from the 2024 holiday season through today, reveals that the 2025–26 holiday season is dominated by seven themes:

Across the UK, EU, and US, positive sentiment toward the upcoming holiday season and brand-led experiences is strong, but a large neutral band signals opportunity. Brands that personalize, reduce friction, and clearly align their values with consumers’ priorities, from affordability and convenience to inclusivity and sustainability, will win both baskets and long-term loyalty.

Strategic takeaway: It is no longer enough to target broad segments; the advantage lies in targeting specific moments and mindsets across the season. Precision insight powers precision activation.

Shoppers are using AI gift finders (for example, tools like DreamGift or Genie by GiftList that recommend gifts based on a few questions), creator-curated guides, retailer wishlists, and social searches to match taste with budget.

“Dupes” (lower-priced alternatives that resemble premium or viral products, often discovered via social platforms and marketplace apps) are normalized, but quality signals such as reviews, user-generated content (UGC), and creator testing still drive purchase confidence.

Tiered gifting emerges as a key trend of the season, especially across UK and EU audiences but visible in the US as well. Shoppers are building gift “stacks” that combine one signature hero item with smaller add-ons such as practical stocking fillers, experiences (for example, tickets or vouchers), and small keepsakes.

What to do: Embed UGC and creator content into on-site recommendation widgets, such as “You might also like” or “Still deciding?” carousels on product and cart pages. Launch dynamic persona bundles such as gamer, home chef, skincare minimalist, or teen tech. Offer price-protected “gift match” guarantees to build trust.

Sources: Retail Technology Innovation Hub, StoryStream

Holiday shopping behavior concentrates at three points throughout the year:

US audiences lead Cyber sales while UK and EU see last-week click-and-collect surges.

What to do: Sequence communications by intent. Educate buyers in October and November through guides and comparisons, use Cyber week to convert with transparent pricing and protection, and push convenience in late December with stock visibility, pickup options, and e-gift cards.

Sources: Photoshelter

Consumers prefer shareable, simple, and cozy experiences such as potluck dinners, themed movie nights, neighborhood light walks, small concerts, and workshops led by creators and influencers. Here “creator” can mean both social-first influencers and hands-on hosts (for example, a cooking class, crafting session, or mixology night). Social content rewards realistic, low-stress celebrations over polished perfection.

What to do: Package “event in a box” kits with playlists, décor bundles, local maps, and creator co-host nights. Provide downloadable checklists and templates for easy sharing.

Sources: listening247 social listening across TikTok, Instagram, and Reddit; creator economy analysis

Holiday food conversations center on easy prep, allergen-friendly options such as gluten-free and dairy-free recipes, and staying budget-friendly. EU audiences emphasize local seasonal produce while US consumers over-index on shoppable recipe carts.

What to do: Pair recipes on your site or app with pre-built online shopping carts from your retail partners (for example, “add all ingredients to basket” with one click). Add “swap this for that” filters within the recipe or cart experience for allergen-friendly, budget, or dietary alternatives, and promote leftover ideas and storage tips to reduce waste.

Sources: Social video analysis and retail innovation coverage

Travel conversations highlight the need for flexible booking, transparent fees, and clarity on whether insurance options are worth it. Many travellers describe insurance as “worth it” for missed connections, medical issues, or disruption refunds. “Homecoming” storytelling, from small-town charm to family reunion content, performs strongly across all regions. European users specifically seek real-time transport updates amid local disruptions.

What to do: Promote flexibility upfront, provide live status updates, and curate close-to-home itineraries with local creators.

Sources: Reddit and forum analysis from listening247

Consumers are rejecting extreme resolutions in favor of small, steady habits such as 15-minute workouts, daily journaling, meal prepping, and sleep hygiene. UK and EU audiences embrace group challenges while US users lean toward app-based streak tracking and digital accountability.

What to do: Launch micro challenges with creator accountability bundles and starter kits, and develop adaptive, AI-personalized plans that make habits easy to track.

Sources: Behavior-change conversation analysis, Digital Defynd

Sustainability shows up as practical, not performative. Consumers embrace resale, rental occasion-wear, durable basics, and minimal packaging. Tone matters as well: messaging needs to be approachable and useful to win consumer trust. EU discussions highlight circularity and resale, while the US maintains a focus on durability and long-term value.

What to do: Promote repair and resale programs, offer impact receipts, and provide packaging options or eco-delivery toggles.

Sources: Sustainability and fashion discourse, Vocast

Creators drive faster conversions with “I tested 5” or “what to actually buy” formats across Instagram and TikTok.

Social search is the new shopping assistant. Optimize captions and alt text for shopper queries such as “gift for dad under £50” or “last minute Secret Santa EU.”

Sentiment drivers include stock transparency, clear shipping cutoffs, authentic lifestyle content, inclusive representation, and smooth returns.

Friction points include hidden fees, late deliveries, unclear allergens, and inconsistent pricing between ad and cart.

This season isn’t about bigger lists but about smarter choices, simpler hosting, and more meaningful moments. With over 58,000 UK, EU, and US conversations analyzed across the last year, one truth stands out: when you know your audience deeply, targeting becomes effortless and performance follows.

In alcohol, taste drives sales, but knowing your customer drives growth.

From premium rituals to mindful moderation, we dove into the habits of gin drinkers in the UK, EU, and US. Based on public conversations across X, Instagram, TikTok, Reddit, forums, and blogs over the last 6 to 12 months, we identified the top 5 consumer trends shaping the gin industry.

listening247’s analysis highlighted five dominant topics that matter to consumers for precision targeting:

Gin is uniquely positioned across all five: a canvas for mixology, a gifting favorite, a mindful-friendly base, a craft storytelling vehicle, and a creator economy staple. The takeaway is simple: the better you know your customer, what they value, when they drink, and how they discover, the easier and cheaper it is to target them.

Consumers talk about “worth it” bottles, limited editions, and gifting bundles, all of which make them feel special. Gin performs strongly where botanicals, packaging, and glassware create perceived value. Clear use-cases (hosting, gifting, collecting) correlate with higher willingness to pay.

Targeting takeaways:

Source: In 2025, high-end spirits and premium mineral waters saw continued growth as younger consumers sought out quality over quantity (Forbes, 2025).

TikTok hashtag stream: #GinGifting (unboxings, bottle+glassware bundles): TikTok #GinGifting

Short-form videos fuel cocktail discovery; forums and blogs validate this by providing techniques and tips. Gin benefits from “five-ingredient max” serves and botanical exploration with citrus, herbal, floral, and tea-infused profiles surfacing as distinct flavor personas.

Targeting takeaways:

Source: According to the Brands Report 2025, gin continues leading bar menus globally, with brands like Tanqueray and Bombay Sapphire dominating cocktail lists, driven by flavor innovation and botanical exploration (Drinks International, 2025).

Blog Example: “3 citrus-forward gin serves in under 20 seconds” (short-form compilation frequently shared to IG/TikTok): A Couple Cooks – Gin Cocktails Note: long-running page, updated frequently and widely reshared in Reels.

TikTok hashtag stream: #5IngredientCocktails (simple builds, high saves): TikTok #5IngredientCocktails

Low/no-alcohol and sessionable serves are mainstreaming. Consumers want to keep the ritual without the excess. Gin-alternative bases and long G&Ts/spritzes are popular in weekday and wellness-aligned moments.

Targeting takeaways:

Source: Nearly half of U.S. consumers planned to drink less in 2025, with Gen Z leading the moderation movement, boosting low/no RTD categories, especially in gin (Forbes, 2025).

Blog Example: “Best non-alcoholic gins and long-serve ideas” (shared widely on social): The Spruce Eats – Best Non-Alcoholic Gins

TikTok hashtag stream: #SoberCurious (alternation, low/no builds): TikTok #SoberCurious

Sustainability discussions are rewarded when they include details such as where the botanicals are sourced, regenerative practices, water/energy footprints, lightweight packaging, and refill options. Provenance storytelling, the act of communicating a product's or brand's history, origin, and journey as a compelling narrative, performs best when paired with verifiable progress.

Targeting takeaways:

Source: Provenance storytelling is central to the premiumization of gin, with brands emphasising botanicals sourced from distinct geographies and eco-friendly production (Drinks International, “Gin: Keeping It Real”).

Instagram hashtag stream: #SustainableGin (lighter glass, recycled content, energy/water claims): Instagram #SustainableGin

Promotions that educate outperform general discounts. QR-to-recipe journeys, flavor quizzes, creator-led tastings, and geo-targeted events move audiences from awareness to trial to repeat purchases.

Targeting takeaways:

Source: Consumer conversations on promotion-driven discovery highlight how education-first activations and storytelling outperform discounting (The Grocer, 2025).

TikTok hashtag stream: #CocktailQR (QR-to-recipe content flows): TikTok #CocktailQR

Instagram: #GinTastingEvents (geo-tagged tastings; UGC recaps): Instagram #GinTastingEvents

Alcohol’s growth in 2025 is shaped by consumers who want simplicity and to be part of a story. Gin is perfectly placed to win the moment: flavor forward, ritual ready, provenance proof, and creator friendly. With listening247’s AI, brands can turn noisy conversations into precise segments, so the right bottle, serve, and story meet the right person at the right time.

What do over 9,300 social media conversations on platforms like X, Instagram, and TikTok analysed by listening247 tell us about the grocery retail industry in 2025? It’s in the midst of transformation. Technology, sustainability, and evolving consumer habits are reshaping how we shop for groceries. This blog dives into the latest trends and how retailers are using AI and automation to meet the rising demand for convenience, personalisation, and seamless shopping.

Did you know? Online grocery shopping has jumped 56% since 2022, with 22% of consumers now using delivery or pickup services.

In 2025, convenience is king. Shoppers want quick, affordable, and easy ways to buy groceries online or offline. To keep up, grocery stores are turning to tech like AI and unified checkout systems to manage inventory and prevent stockouts, while delivery services via mobile apps allow for speed and convenience. This, in turn, gives grocery brands more data to personalise the online shopping experience.

Sources: (Drive Research)

More shoppers are thinking green. From reducing food waste to offering local and eco-friendly products, sustainability is a priority. Smart checkout systems help by adjusting prices to avoid waste and highlighting eco-products. In South Africa, 64% of consumers are willing to pay more for sustainable goods. This means retailers who make green options affordable and visible have a big opportunity to lead and win loyal customers who care about the planet.

Sources: (Extenda Retail); (KLA)

Many stores are turning to automation to lower costs and increase efficiency. Self-checkouts, mobile payment options, AI inventory tracking, and even cashierless stores make shopping faster and easier. Behind the scenes, these technologies also help reduce errors. Think smart shelves that track stock and AI to predict busy times so stores can staff smarter, or even autonomous stores that run almost by themselves. Automation is not just about convenience; it’s about efficiency.

Sources: (Extenda Retail); (Scale Computing)

Today’s shoppers are seeing shopping experiences tailored just for them. Using AI and data, grocery stores can create personalized deals, targeted ads, and in-store experiences that feel unique to each customer. Stores are using the data they collect to better identify their ideal customer profile and tailor stocked items or promotions for that group, turning data into meaningful connections. Personalization is no longer a bonus; consumers expect it.

Sources: (IGA); (Supermarket News)

Shoppers want to move seamlessly between physical stores, websites, and apps. Retailers are building integrated systems powered by AI to deliver consistent, personalized experiences no matter where you shop. With 73% of consumers preferring multichannel shopping, stores that don’t connect their online and offline worlds risk losing customers. AI helps by analyzing data across all touchpoints, allowing real-time personalized service and smarter marketing. The challenge? Keeping data private and using AI ethically.

Sources: (Uniform Market); (Strategy Software); (Bridg)

AI is driving the changes behind these grocery retail shifts. It helps predict what customers want, manages stock efficiently, personalizes offers, and smooths out delivery logistics. In a world where budgets are tight and shopping habits shift fast, AI enables retailers to stay agile and relevant.

With over 1 in 5 shoppers now buying groceries online, AI makes sure the experience is seamless, cost-effective, and scalable, helping stores grow while meeting new consumer expectations.

listening247’s analysis of over 9,300 social media conversations around grocery shopping trends makes it clear that the 2025 grocery retail industry is defined by five big forces: online shopping, sustainability, automation, personalization, and omnichannel experiences. Consumers want more than products; they want convenience, value, and choices that reflect their values. To win, retailers must become data-driven and customer-focused, using AI and technology not just to keep up, but to lead. The future of grocery shopping isn’t just about shelves and checkouts; it’s about smart, seamless, and sustainable experiences that connect with shoppers on a whole new level.

listening247 creates a monthly insights report of outdoor/sports footwear for a minimalist running shoe company to:

Using its proprietary Gen AI model, l247

Based on identified conversations, L247 used its proprietary Gen AI model to create copy and imagery for ads:

listening247, in partnership with Double A Labs and a top film production and distribution company, conducted research on “Why and How Social Media Influences Viewership or Brand Perception.” The objective was to:

Digital Focus Groups + Social Listening

listening247 analysed the consumer segment for biscuits to identify opportunities by understanding:

Using its proprietary Gen AI model, l247:

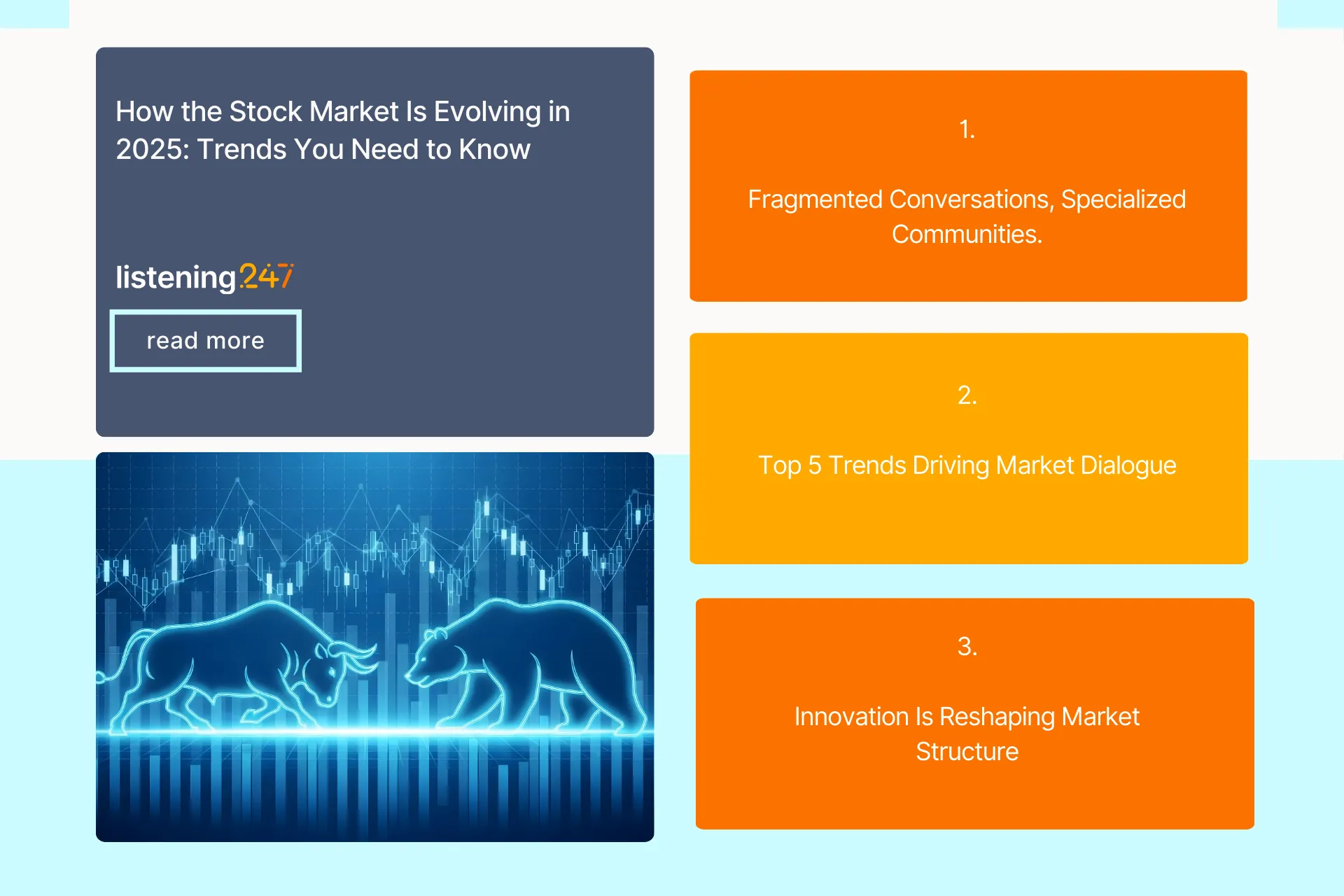

What do thousands of online conversations about the stock market reveal? The old way of doing things is gone. The market is no longer a single, unified place but a collection of specialized communities, each with its own interests. Company fundamentals are no longer enough to discover alpha; alternative data (sentiment and buzz) from social media conversations is becoming an integral part of every hedge fund's investment strategy. To succeed, you have to know what matters to each community.

listening247 collected, processed, and analyzed over 200,000 social media conversations on X, Instagram, forums, and blogs and discovered the top five topics leading the online discussions:

Surprisingly, Regulations and compliance (13,225 posts) and Technology and innovation (13,099 posts) were further down the list than expected.

Why is this valuable? The data reflects a growing fragmentation of the market's social dialogue, which suggests distinct communities, each with its own set of concerns and interests. The stock exchange is evolving into a collection of interconnected but specialized markets, each with its own dedicated audience. Monitoring each niche ensures that none is overlooked, which in turn supports growth.

People are talking about new ways to invest as the securities exchange market is poised to grow by $56.67 billion by 2029. Digital assets and sustainable (ESG) investments are becoming a big deal. It's not just about getting a good return; it's about making a positive impact. But with innovation comes new risks. Market volatility is rising, pushing gold prices up and making debt more fragile.

Sources: (SA Reserve Bank); (technavio.com); (easyequities.co.za)

The trading market is faster and more accessible than ever. Automated trading and smart algorithms have made it easier for everyday investors to get involved. Growth stocks, small-caps, and ETFs are in the spotlight as investors look for strong returns. But being fast isn't enough. The key to success is having a long-term strategy and a diverse portfolio.

Sources: (Trading Bells); (The Recursive); (itbfx.com)

Listing a company on an exchange is no longer a local affair. With 62% of U.S. stock exchange listings now originating from foreign issuers, it is clear that the market values the deep liquidity and investor access available through the NYSE. Geography now matters less than the value proposition an exchange offers.

New private markets, like London’s Pisces and Kenya’s micro-share trading, are also emerging, offering more ways for companies to raise money and a wider variety of options for investors.

Sources: (ey.com); (monkeyweek.com); (jse.co.za); (linklaters.com)

As everything moves online, a community focused on security is growing, and it is needed. Threats from hackers and sophisticated cybercriminals are on the rise, exploiting weaknesses to advance geopolitical goals and challenge the security of stock exchanges.

This has led to increased demand for stricter regulations, software security measures, and consumer education to better defend financial systems and keep them safe.

Sources: (mofo.com); (jpmorgan.com)

Crypto is no longer on the outside looking in. Things like tokenized stocks offered by Kraken, have blurred the lines between crypto and traditional investing, making it possible to trade assets like Apple and Tesla 24/7 across borders. This enables a level of global participation and liquidity that legacy exchanges were never designed to handle leading to regulators, such as the SEC and CFTC, racing to build the scaffolding and infrastructure to blend the agility of crypto with the stability of traditional finance.

Sources: (reuters.com); (forbes.com); (financeworld.io)

While the above five topics dominate the conversation, two other trends are quietly driving big changes. AI technology is now a core part of the market, helping to spot fraud and create personalized investment plans.

Looking ahead, AI will likely reshape the very architecture of stock exchanges. Expect to see AI-regulated trading platforms, smarter market surveillance systems to detect fraud and manipulation, and more personalized investment strategies driven by AI-powered robo-advisors. Exchanges may adopt AI to improve settlement efficiency, reduce systemic risk, and automate compliance. However, as AI grows more influential, regulators will face a tough balancing act: ensuring transparency, accountability, and fairness in a world where machines might understand the market better than we do.

The stock market of 2025 is a mix of traditional finance and new ideas, each with its own distinct communities and needs for continued growth and expansion. By reviewing over 200,000 posts, we see the market being reshaped by digital assets, faster trading, and a surge in cross-border listings. This evolving landscape blends traditional finance with innovation and global connectivity, while creating new opportunities and challenges for investors and regulators alike.

To succeed in this new landscape, it's essential to not only understand the broad trends but also actively listen to and engage with the unique concerns of each specific community, transforming challenges into opportunities.

Facing rising costs and shifting consumer needs, an insurance leader turned to a private online community to unlock real-time insights and drive smarter growth.

A leading insurance company faced the challenge of creating educational material that addressed consumers’ concerns while guiding the right consumers towards the right type of insurance. The company needed a solution that was both quick and cost-effective to discover insights about the insurance industry and communicate effectively with customers.

To address these challenges, listening247 introduced an innovative solution: a private online community platform designed to give the insurance company a better understanding of the customer perspective on its products and services. This platform facilitated real-time interactions and allowed relevant departments, such as the marketing and research teams, to conduct activities including bulletin board discussions, surveys within the community, and video diaries.

1. Efficient Decision-Making: The customer quickly gathered initial input from consumers, which guided crucial marketing choices, ensuring they maintained a competitive edge in the market.

2. Enhancement of Economic Strategy: The online community platform minimised total market research expenses by delivering valuable insights, thereby optimizing the return on investment for marketing expenditure.

3. Enhanced Consumer Engagement: Consistent interaction with the consumer base via the platform bolstered brand loyalty and offered more profound insights into the consumer experience, leading to enhancements in marketing strategies and product offerings.

To stay ahead in the competitive personal care market, a global blue-chip organisation sought to deeply understand consumer perceptions across six diverse countries. Facing the challenge of capturing nuanced attitudes in multiple languages, they partnered with listening247™. Leveraging advanced AI-driven social intelligence, the initiative uncovered real-time, high-accuracy insights that informed strategy, enhanced campaign effectiveness, and aligned offerings more closely with consumer expectations.

A global blue chip organisation needed to explore the landscape around a personal care category in 6 different countries to discover consumer perception and attitude towards their product. Their goal was to thoroughly investigate consumer perceptions and attitudes around the personal care category to better inform future marketing campaigns.

To tackle these obstacles, listening247 adopted an all-encompassing social intelligence strategy employing sophisticated AI models. This method entailed gathering user posts from public websites across various digital platforms in 6 different languages, including German, where the sentiment accuracy at sentence level was 91%. The data was carefully harvested, then cleaned through noise elimination to remove irrelevant posts, and finally annotated meticulously with brands, sentiments, and topics.

1. Dynamic Market Reactivity: Utilising the listening247™ platform enabled the client to monitor shifts and adjust promptly, thereby sustaining a competitive advantage.

2. Enhanced Marketing Strategy: The results were leveraged by the client's advertising agency to craft upcoming campaigns.

3. Better Market Alignment: The provided insights enabled the client to synchronise their offerings with consumer preferences, thus improving customer engagement.

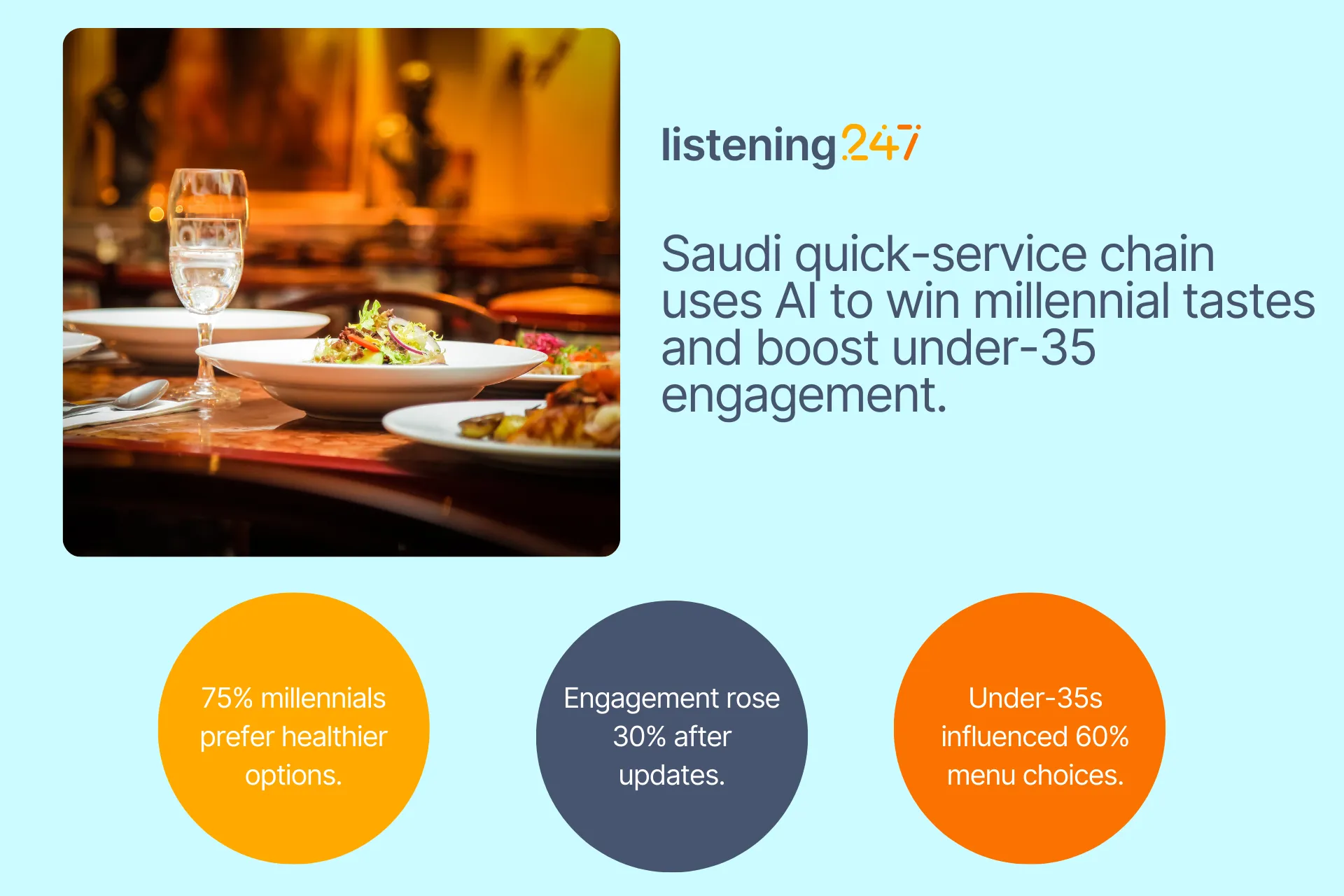

A leading Saudi quick-service chain faced the challenge of decoding under-35 consumer trends from vast multilingual data. Using advanced AI and three months of digital insights, listening247 helped tailor menus in ways that boosted relevance and engagement and informed decisions.

A leading quick service restaurant chain in Saudi Arabia needed to grasp the consumer trends among the under-35 demographic. The challenge was to sift through extensive multilingual digital content from various platforms to extract precise, relevant data.

To address these challenges, listening247 implemented a robust methodology involving the collection of three months' worth of historical data across key digital platforms, using generic keywords to capture a broad spectrum of consumer behaviour and preferences. Advanced AI models were employed to annotate the data with sentiment and relevant topics.

1. Informed Decision-Making: The insights allowed for data-driven adjustments to the restaurant's menu.

2. Enhanced Market Relevance: Understanding young consumers' preferences enabled the restaurant to better align its offerings.

3. Improved Customer Engagement: The strategic updates led to higher engagement and satisfaction from the crucial under-35 market.

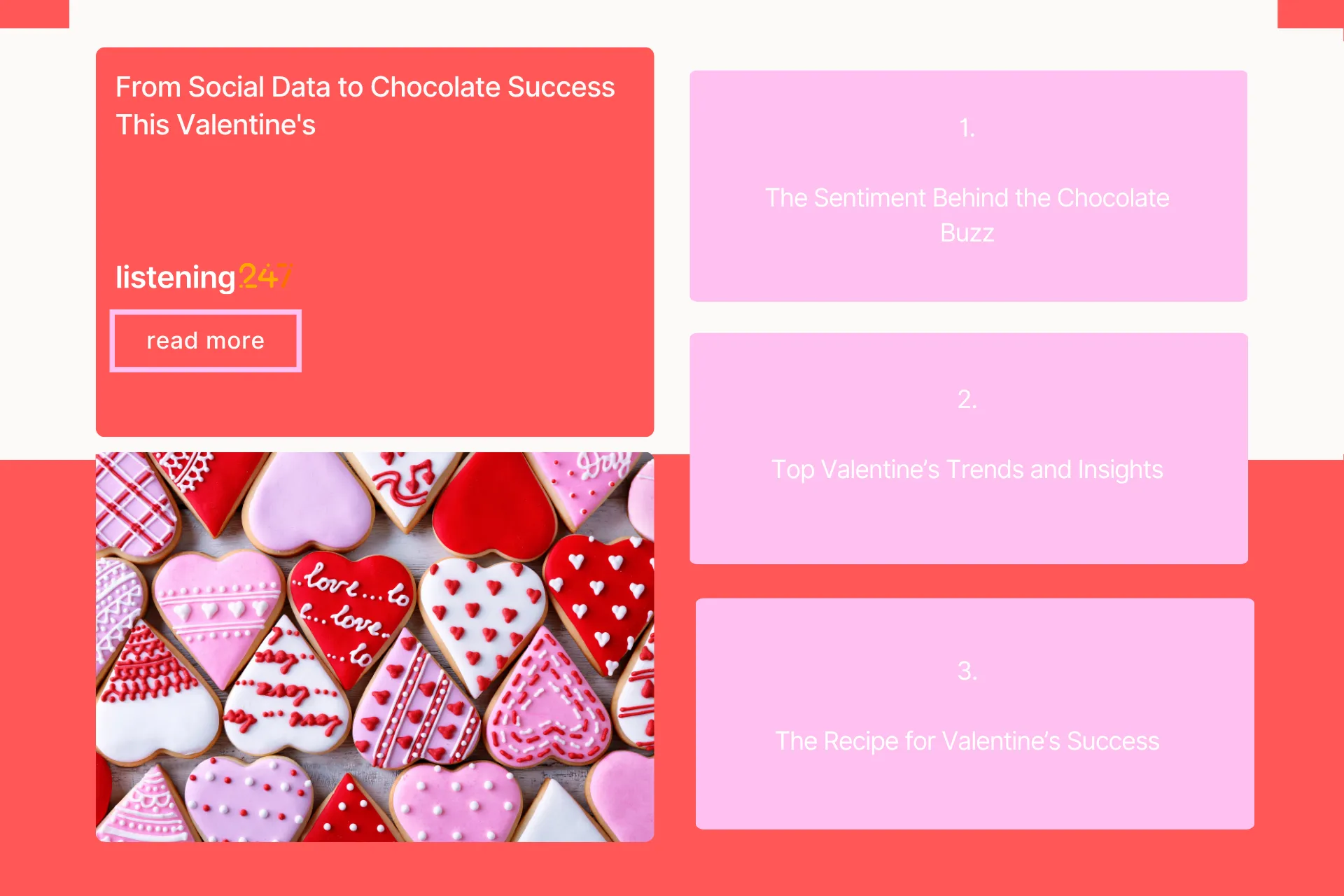

With Valentine’s Day on the horizon, the chocolate industry is in full swing to make its mark and drive sales and brand engagement. Using listening247’s Social Listening and Analytics, we analysed 24,930 posts from Instagram across multiple regions and languages, including English, Italian, Korean, and Indonesian. Our goal? To uncover the most influential conversation drivers around chocolate, love, and seasonal promotions.

Among the posts analysed:

If there’s one thing chocolate lovers adore more than indulging, it’s winning free chocolate. Giveaway promotions dominated the conversation, accounting for 14,884 of the total posts analysed. Brands like That’s It and Choczero sparked engagement through interactive contests, encouraging users to tag, share, and follow for a chance to win exclusive treats. Lindt and Baci Perugina took things further, tying their giveaways to limited-edition Valentine’s chocolates, ensuring their brand stayed top-of-mind as shoppers browsed for the perfect gift.

Takeaway: Giveaways don’t just create buzz; they build brand affinity and amplify visibility across social media. Tying contests to seasonal events maximises impact.

Valentine’s Day isn’t the only reason people talk about chocolate; seasonal occasions accounted for 6,085 posts, reinforcing how deeply chocolate is woven into celebrations. Brands like Lindt and Baci Perugina successfully capitalised on holiday excitement with heart-shaped boxes, themed promotions, and limited-edition releases.

Beyond promotions, people are passionate about their chocolate preferences. 3,801 posts discussed chocolate types, from dark and milk varieties to unique flavours. Discussions on discontinued favourites like mango and cream truffles gained traction, highlighting opportunities for brands to reintroduce nostalgic flavours.

Takeaway: Nostalgia sells. Revisiting past favourites or launching limited-edition throwback collections can rekindle consumer excitement.

Valentine’s Day-specific promotions accounted for 807 posts, with Lindt’s Pick & Mix selections and Baci’s signature love-note chocolates standing out. While consumers embraced these festive offerings, some concerns emerged around pricing. A Valentine’s loyalty programme could be a strategic move to balance premium appeal with affordability.

Takeaway: Limited editions fuel demand, but pricing strategies should ensure accessibility without compromising brand value.

With 493 posts, chocolate emerged as more than just a treat; it’s a symbol of affection. Baci Perugina’s multilingual “Love Note” campaign was a standout, adding a personal touch that deepened emotional connections. Lindt’s Pink Mixed Bar Bouquet and Lindor chocolates, often paired with roses, reinforced the role of chocolate in heartfelt gifting.

Takeaway: Thoughtful packaging and personalised messaging enhance emotional appeal and gift desirability.

Although less frequently mentioned, events and gifting traditions made their mark, with 275 posts discussing chocolate’s role in group celebrations and gifting culture. The Valentine’s Chocolate and Wine Walk was a particular highlight, proving that immersive brand experiences leave a lasting impression.

Takeaway: Experiential marketing, such as chocolate pairing events, can deepen consumer engagement beyond traditional advertising.

Valentine’s Day remains a key moment for chocolate brands. The top-performing strategies? Giveaways for engagement, personalised packaging for emotional appeal, and nostalgia-driven product revival to spark consumer excitement. Brands like That’s It, Lindt, and Baci Perugina demonstrated how interactive campaigns and thoughtful promotions can turn seasonal shoppers into lifelong customers.

As brands prepare for the next big occasion, one thing is clear: chocolate is more than just a treat; it’s a storytelling tool, a memory-maker, and the ultimate symbol of indulgence and love.

As one would expect, social media intelligence (or just social intelligence) came up as a subject at the “Social Intelligence World” conference in London back in November 2018. More specifically, it came up in the context: how does it differ from social media listening?

This question took me back several years, to when we published our first eBook about “web listening”, our label of choice at the time and something of a buzzword; its most popular version was “social media monitoring”. Social media intelligence did not come up at all back then, although in hindsight it is odd that it didn’t. I am not sure how we missed it then, but now, when someone asks what the difference is between intelligence and listening, the answer seems quite obvious!

Social media listening or social media monitoring is simply about harvesting the online posts and maybe even annotating them with a topic and/or sentiment. If the annotation is accurate then it answers questions like ‘what are people talking about online’ or ‘how do they feel about my brand’? Social intelligence on the other hand, is about understanding the deeper meaning of what people choose to post - although sometimes there isn’t one - and link it to a business question; notice how the term ‘actionable insights’ has not come up yet? Another buzzword that is overused in the market research sector, and another one for which we published numerous blog posts with our own - very concrete - definition of what it really is!

When we say ‘social media’ in this context we don’t just mean social media platforms, but rather any public online source of text and images which might express consumer or editorial opinions and/or facts. A side note: things would be a lot easier if we meant what we say in a literal way. People who coin phrases or titles or headings tend to take a lot of freedoms on the altar of “crispness” or “snappy creativity”!

listening247, an aspiring state-of-the-art DIY SaaS, looks at the world of social intelligence via four lenses:

We would be remiss if we didn’t mention text and image analytics as a standalone discipline when the source is not social media or other online sources. In such a case the only difference is that the source is not the online web but any other source of text and images. Perhaps if the source is not the online web it should just be called Business Intelligence, which is an old and very familiar discipline within organisations.

Back to the 4 modules: they have the power to generate intelligence derived from unstructured data, which make up 80-90% of human knowledge produced since the beginning of time. Structured data, which are effectively numbers in tables or graphs, only account for 10-20% of all our knowledge as a species.

Unstructured data can be harvested from the web and if we want to stay out of jail we will stick to public data (as opposed to private conversations or personal data). They can be harvested through APIs that the sites which contain the data make available for pay or for free, and through scrapers which can crawl a website and find specific consumer or editorial posts. Responses to open ended questions in surveys, transcripts of focus groups or even call centre conversations are also great sources of opinions and facts (i.e. unstructured data).

In order to make sense of big unstructured data, machine learning is a good place to start. Supervised machine learning requires humans to annotate a big enough sample of the available data. The annotated data-set is then used to train a machine learning algorithm to create a model that does a specific job really well; the aim is to get over 80% relevance, precision and recall. Unsupervised machine learning is making great strides but cannot replace the supervised approach currently.
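As a rough illustration of the 80% target mentioned above, the sketch below computes precision and recall for a single label by comparing a model's output with human annotations of the same posts. The sample labels are hypothetical and invented purely for illustration; they do not come from any listening247 model or data-set.

```python
def precision_recall(human_labels, model_labels, target):
    """Return (precision, recall) of the model for one target label."""
    pairs = list(zip(human_labels, model_labels))
    true_pos = sum(1 for h, m in pairs if h == target and m == target)
    false_pos = sum(1 for h, m in pairs if h != target and m == target)
    false_neg = sum(1 for h, m in pairs if h == target and m != target)
    # Guard against division by zero when the label never appears.
    precision = true_pos / (true_pos + false_pos) if (true_pos + false_pos) else 0.0
    recall = true_pos / (true_pos + false_neg) if (true_pos + false_neg) else 0.0
    return precision, recall


# Hypothetical hold-out sample: human annotation vs. model prediction.
human = ["positive", "negative", "positive", "neutral", "positive", "negative"]
model = ["positive", "negative", "neutral", "neutral", "positive", "positive"]

precision, recall = precision_recall(human, model, "positive")
# The model is only considered good enough when both measures exceed 80%.
meets_target = precision > 0.8 and recall > 0.8
print(f"precision={precision:.2f} recall={recall:.2f} meets_target={meets_target}")
```

In this made-up sample the model falls short of the target on both measures, which in practice would mean annotating more training data and retraining.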

Once we have a trained model and our data-set we need to process the latter and annotate it in its entirety. The data can be filtered and navigated in many ways. Structured data can be produced in the form of tables, making the analysis of the data-set possible. The goal here is of course to enable human analysts to uncover actionable insights - since machines are not there yet.
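The step from annotated posts to structured tables can be sketched as follows. This is a minimal example under stated assumptions: the field names (`brand`, `topic`, `sentiment`), the brands, and the posts are all hypothetical, and a real data-set would hold many more records and annotation fields.

```python
from collections import Counter

# Hypothetical annotated posts; every value here is invented for illustration.
annotated_posts = [
    {"brand": "Brand A", "topic": "price", "sentiment": "negative"},
    {"brand": "Brand A", "topic": "taste", "sentiment": "positive"},
    {"brand": "Brand A", "topic": "taste", "sentiment": "positive"},
    {"brand": "Brand B", "topic": "price", "sentiment": "neutral"},
    {"brand": "Brand B", "topic": "service", "sentiment": "negative"},
]

SENTIMENTS = ("positive", "neutral", "negative")


def filter_posts(posts, **criteria):
    """Keep only the posts whose annotations match every given criterion."""
    return [p for p in posts if all(p.get(k) == v for k, v in criteria.items())]


def sentiment_table(posts):
    """Turn annotated posts into a brand-by-sentiment table of counts."""
    counts = Counter((p["brand"], p["sentiment"]) for p in posts)
    brands = sorted({p["brand"] for p in posts})
    return {b: {s: counts[(b, s)] for s in SENTIMENTS} for b in brands}


table = sentiment_table(annotated_posts)
for brand, row in table.items():
    print(brand, row)

# Drill down: only the posts about price.
price_posts = filter_posts(annotated_posts, topic="price")
```

The table is what an analyst would read; the filter is what lets them drill from a number back to the underlying posts.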

Data visualisation is typically done on dashboards or PPT presentations. The most appropriate types are drill-down and query dashboards. There are multiple delivery mechanisms and use cases, e.g.

Social media intelligence has multiple use cases for multiple departments as shown in the list below, annotated as multipurpose ‘intelligence’ or specific ‘actions’:

The many departments involved and the many use cases ultimately create a confusion as to who the owner should be within an organisation. Maybe Social Intelligence should simply be part of the Business Intelligence or the Market Research department, offering custom user interfaces to the various action players with only the information they need specifically to take action.

Having a Business Intelligence or Market Research Department is a privilege reserved only for large organisations. For small and medium enterprises (SMEs or SMBs) that do not have a business intelligence department a different approach and possibly nomenclature should be employed; but this is the stuff for another blog post. In the meantime let us know where you stand on all this by emailing us or tweeting to @listening247AI.

Without a doubt it pays to be data driven. McKinsey Global Institute reports that data-driven organizations are now 23 times more likely to acquire customers, 6 times as likely to retain customers, and 19 times as likely to be profitable as a result.

Some organisations have business intelligence and market research departments, and many others don’t. Those who don’t are typically driven by the decisions of their senior management. What this means is that sometimes a handful of people work together to arrive at a consensus decision, and sometimes a single person - the CEO or the Head of a Department - makes a call based on their judgement alone. Unless their name is Steve Jobs or Jack Welch (well known autocratic leaders who got more things right than they got wrong), chances are their judgement or intuition or gut feeling (call it what you like) will not get them optimal results.

CEOs come and go, some have great intuition, some less so. Some are extrovert and some are “level 5 leaders” to use a definition from Jim Collins’ book ‘Good to Great’, the sequel to ‘Built to Last’… which by his own admission should have been the sequel.

HBS Professor Michael Tushman says so. Being data driven encourages a culture whereby gut feelings and anecdotal information do not carry a lot of weight.

There are many sources and types of data. There are structured and unstructured data (such as text, images and video clips). There are facts and there are opinions. We can get opinions by asking questions in surveys and focus groups, preferably through online communities* or by analysing unsolicited opinions using social media listening, social intelligence or social media monitoring, however you prefer to call this new discipline. And then we have our own data from accounting; sales, profit, expenses; you get the idea.

If all these data are available to all employees and everyone’s goals (including the CEO’s) are measured using these data, then we get public accountability through transparency. This point alone is enough reason for a company to decide to become data driven!

*Did you know you can create and fully customise your own online community? Start your cost and commitment free trial.

4. Fast & confident decisions:

When a business decision is based 80% on data and 20% on gut feeling then it will be fast and confident. Companies that take a long time to debate and decide on something, and then even longer to execute are overrun and crushed by their competitors.

When decisions are not based on mood and appetite but on data they tend to be consistent and inspire stability to all stakeholders.

Curiosity is a vital characteristic of innovative people. Data availability allows the curious to find answers to questions. The more the questions and answers the more the successes.

Abundance of data on its own will not do the trick. We need people to turn the data into information, then into knowledge and then into insight and hopefully foresight.

Talking about foresight, predictive analytics is what sometimes produces it. Without data predicting anything becomes a shot in the dark.

Becoming a data driven organisation is not possible from one day to the next. We need data, ways to analyse it and a data hungry culture with people that are data literate and buy into the concept. It takes commitment from the CEO and the management team and it takes perseverance. Unless there is objective data that supports a decision, regardless of how much we think we know what action to take, we should resist taking it and we should always ask the question: what data supports this decision?

Yes!

I thought I should get the answer to the title question out of the way, not that it wasn’t obvious what the answer would be. I violated one of the cardinal rules of market research in this case and asked a biased question. Having said that, let’s use a methodical approach to prove that this answer is indeed the correct one. Let’s start by first considering what market research is, and what it is not.

Market research is:

Market research is not:

Small and medium sized enterprises (SMEs) do not have market research departments; they often don’t even have one single employee dedicated to market research. Why do you think that is? In my view, it’s probably because they believe that other investments closer to sales are needed more than market research. Of course this doesn’t mean that their marketing department won’t buy the occasional syndicated research report or even commission some custom research every now and then.

According to the OECD a small enterprise typically generates up to 10 million euros and a medium enterprise up to 50 million euros of annual turnover. Companies even larger than that don’t have market research departments. If I had to guess I would say a company would need an annual turnover of over 250 million euros for a market research department to be the rule rather than the exception.

Consequently we have two types of companies to consider:

It is safe to say that all blue-chip multinationals belong to group A. Most of them treat market research with respect, especially the FMCG manufacturers. P&G is probably the biggest market research spender in the world. Their ability to swiftly turn information into action is legendary. I will venture to say that this is one of the main reasons they are the biggest FMCG company in the world. They are an insights driven organisation through and through.

The rest of the organisations (those that belong to group B) in most cases have the marketing department deal with carrying out or buying market research when they need it. If they have access to lots of data they may give it to the Business Intelligence department (if it exists, that is), which is more about analysing owned data and not collecting new data - especially customer opinions. Now within a marketing department, depending on company size, we have a CMO or Marketing Director, and the rest of the positions and functions are all over the map: Brand Managers, PR Managers, Social Media Managers, Digital Marketing Managers, Communications Managers (internal and external).

For all the things that market research is and is not, every person in a marketing department - all things being equal - would prefer to be called something other than a ‘market research manager’. A market research manager is not on the front line heroically battling competition and helping the organisation sell more… they are an ancillary service in the absence of which the heroic marketing employees will make decisions based on their experience and gut feeling. Without data, some will get it right a few times, and they will make sure it is known by everyone and celebrated; in most cases they will get it wrong, or not entirely wrong but without great results, and they will find ways to explain it away (i.e. shove it under the rug) and move on. On such occasions market research is actually the enemy because it can show exactly what the marketers did wrong or, even worse for them, why they should not have launched that campaign or changed that product messaging or package. The market research method they should have used is called pre- and post-campaign evaluation. It can be carried out using social intelligence and online survey methodologies.

Last November I was speaking at the first social intelligence conference of its kind - probably in the world. It took place in London and it was about social media listening and how to turn the findings into useful intelligence. A few of the pundits held the opinion that social intelligence should be its own discipline and not be part of the insights function (the slightly sexier way of saying market research). When I asked why, the answer was: no-one in a marketing department wants to be called a market research manager... thus “market research” are dirty words for marketers; case closed. I would love an opportunity to discuss this with you, the readers of this post, whether you have other thoughts on this subject or (even better) are in agreement. Please write to me on Twitter @listening247AI or send me an email.

Article titles are very important; they can make or break an article, so I usually consider multiple before I choose one. Here are the ones that did not make it this time:

On to our topic, there is a phrase I first heard from a friend in Poland - who probably got it from Arthur H. “Red” Motley or Peter Drucker (the famous business author), or even IBM’s Thomas Watson: “nothing happens until someone sells something”. These were all business people and they obviously meant this phrase solely in a business context, but I think the phrase is true in a much broader sense.

Think about it; if you are a kid you sell how much you want that toy to your parents, if you are a teacher you sell the importance of education to your students, if you are a priest you sell your religion to your community, if you are a politician you sell your plan to the voters... you get the idea. “Selling” goes beyond trading products or services for money. So when Drucker says “nothing happens...” it looks like literally nothing happens; these business gurus have elevated themselves to deep philosophers by sharing this universal truth with the world, probably unknowingly.

This article is about generating good sales leads that easily convert to a sale. It is about finding leads deep in the sales funnel, ideally just one small nudge away from buying.

I usually try to provide some structure to make it easier to scan and decide what to read in more detail. In this piece I will describe the lead sources, then talk about the lead generation process, spend some time on conversion and finish off with how purchase intent on social media or on the public web works.

At the highest level there are two types of leads:

People often refer to inbound lead generation as “pull” marketing; in other words the lead finds an offer and proactively reaches out to a sales organisation inquiring about the product or service with the intent to buy. An inbound marketing plan involves great SEO (search engine optimisation), SEM (search engine marketing, otherwise known as paid search), affiliate marketing, brand ambassadors - ranging from nano influencers to celebrities - as well as other types of advertising (online and offline) and digital content sharing. This of course applies to online leads; the 100% offline purchase path is simpler: watch a TV ad, go to a brick and mortar store and buy the product.

Outbound lead generation, also known as “push” marketing, involves reaching out to the prospects with an offer, whether using email campaigns or cold calling; I personally prefer warm calls. Companies usually use their own CRMs to contact existing clients and leads, they may buy lists of possible leads who consented to being contacted, or they may even hire companies that already have access to relevant leads and pay them to contact them with their offering.

The cool way to say lead generation process: Lead Gen. Incidentally, I recently learned from a much younger person that it is not cool to say ‘cool’ anymore. Go figure.

The lead generation and conversion process is simple:

Admittedly finding, qualifying, and contacting a lead is the easy part; the difficult part is to nurture the lead and actually sell something.

I have seen claims that between 7 and 11 touch-points are needed to go from an unaware lead to a converted one to a customer. This is why multi-channel marketing makes a lot of sense.

Imagine a B2B lead receiving a cold email with an offer from an unknown company; they don’t open the email but the subject line and the company name sort of register in their mind. Then on the same day a sponsored post appears in their Facebook newsfeed - now they are trying to remember why this company name is familiar; when the same post appears in their LinkedIn and Twitter feeds they start wondering which company this is, and what they do exactly. Up until this point we have four touchpoints and counting. A week later they receive another email from the same company, only now they actually open it because they are curious… ‘these guys are everywhere’ is the dominating thought in their mind. Touchpoints six and seven are articles that come up when the prospect “googles” the company name. In case you are wondering, there is no magic in appearing in your leads’ social media feeds; it’s all a part of the advertising options each platform offers. All you need to find and target them is the email address of the lead (which you should already have if you included them in your email campaign), which will be matched with the email address they used to sign up.

This lead was nurtured to the point that it now becomes an inbound lead when they land on let’s say the listening247 website and request a free online consultation. From then on, a request for a proposal is solicited, one is sent, negotiated and closed. Job done!

A typical path to purchase or sales funnel starts with awareness, then interest, followed by consideration, intent, evaluation and purchase (see Fig. 2 below).

Google search, which is considered a source of qualified and mature leads, may indicate interest or consideration on the part of the person searching. Both funnel stages come before purchase intent, and thus if there were a way to identify all the leads who intend to buy from a product category before intent is explicitly expressed, it would bring us a big step closer to completing a purchase. The deeper we go in the sales funnel, the more difficult it is to nurture and convert leads to the next stage; this is why, if we can find a lead expressing purchase intent online, it saves us tonnes of money and resources needed to nurture them from awareness to the next point in the funnel.

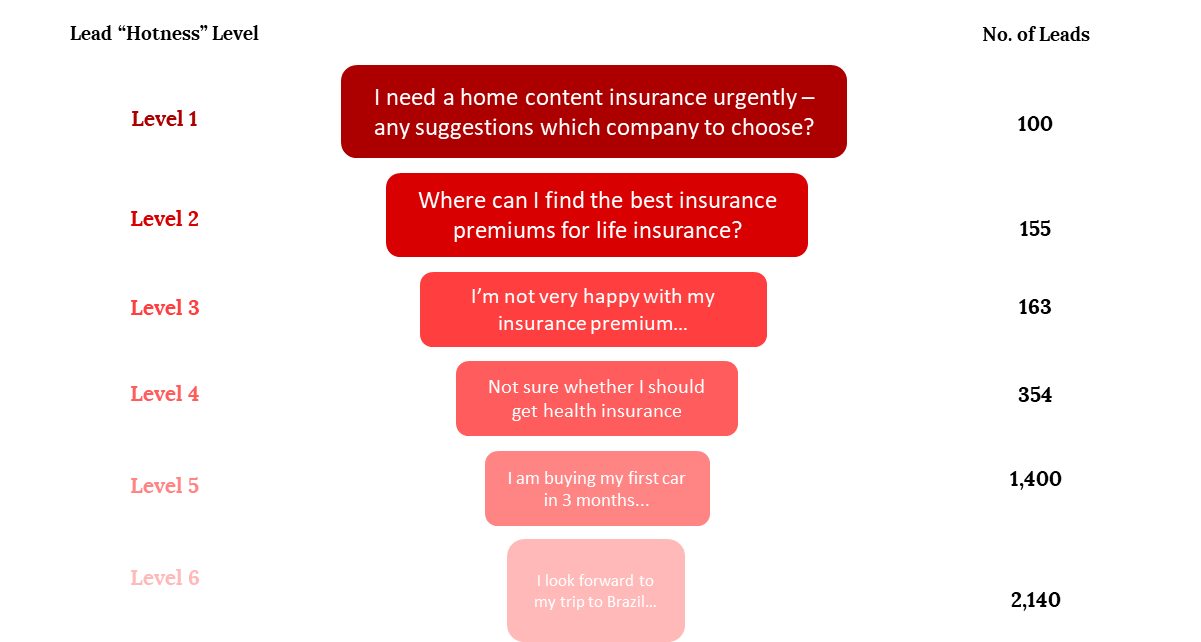

All you need is a social media listening tool that can accurately find people who express purchase intent online and has a machine learning capability to score the leads appropriately (see Fig. 1). Here is how it works:

As ever I am keen to engage in a conversation with you to compare notes, answer questions and ask some as well. Do contact me @L247_CEO or by email.

The missing link in CX measurement is... Social Intelligence!

CX stands for customer experience for those of you who are not familiar with the acronym. There are more related acronyms that are sometimes used interchangeably: EFM (Enterprise Feedback Management), CEM or CXM (Customer Experience Management or Measurement). Measurement happens first, management follows. Titbit: managing the experience without measuring it first is like driving a car in complete darkness.

Forbes says that customer experience is the "cumulative impact of multiple touchpoints" over the course of a customer's interaction with an organisation.

A Wikipedia definition for EFM is: “Enterprise feedback management is a system of processes and software that enables organizations to centrally manage deployment of surveys while dispersing authoring and analysis throughout an organization…

…Modern EFM systems can track feedback from a variety of sources including customers, market research, social media, employees, data collection, vendors, partners and audits in a privatized or public manner.”

This article is definitely about modern EFM systems, and the main point here is that it is not enough to use surveys as the operative word in the EFM definition above.

There are many sources for customer experience feedback but they can be classified in three main groups; these are data from:

The 3rd group is what we refer to as Social Intelligence.

The mainstream customer experience measurement vendors focus on surveys after each experience type; the really good ones also measure visits or sales, recording feedback on digital kiosks, using call centers, chat apps and even face to face interactions to record, measure and integrate.

Here is what the excellent ones do: they do a 360 degree measurement by including and integrating social intelligence on top of everything else. Although social media is mentioned as a source of information by most, it is very rarely included in a customer experience measurement program. When it is actually included, it is limited by the vendor’s language analytics capability, as the few tools that do this can only carry out text analysis - for sentiment in particular - in English, or translate another language into English and then annotate the data with sentiment. Most importantly, when they offer it, their sentiment and topic accuracy is lower than 60%.

EFM is another case of disruption of a very specific part of market research: the stakeholder assessment. Unfortunately, the market research sector has been very slow in adapting to change, with the result being that tech companies have mushroomed in the areas of DIY, Social Media Monitoring, Mobile and EFM/CXM.

An organisation cannot replace its customer loyalty and employee engagement programmes (run by a market research agency) with a flashy software platform from one day to the next. Our suggestion to users is to always ‘connect the dots’ and combine multiple sources of information, i.e. “marry” state-of-the-art technology with experienced analysts and data scientists; only then can true insights be synthesised. A machine cannot do that on its own, even if the best machine learning algorithms and the best methods of predictive analytics are employed.

Some multinational market research agencies that decided to fight back for what has been theirs are Nielsen, Ipsos, Maritz and Kantar. The tech companies that are leading the push and growth in this sector are the likes of: CloudCherry, Medallia, Qualtrics, Evaluagent, Usabilla, Aptean, Critizr, Verint and so on.

Where should all the feedback from all the different sources “live” so that it can fulfill its destiny? Its destiny being to drive customer commitment and loyalty that is. Typically it should “live” on an online dashboard. Is it straightforward to integrate social intelligence to surveys? Nope. It takes a good thorough understanding of how customer satisfaction/loyalty and experience surveys work alongside the unsolicited online posts. Simple things are misunderstood and lead to confusion if the vendor is not experienced in all data sources. For example, someone who posts online is labelled as a “respondent” and their post is labelled as a “response” - which implies there was a question to begin with, when it is rather about an author and a post expressing an unsolicited opinion or fact.
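The respondent/response versus author/post distinction can be made explicit in the data model behind the dashboard. The following is a minimal Python sketch, not a description of any vendor's schema; the class names, field names and the `is_solicited` helper are all hypothetical.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class SurveyResponse:
    """Solicited feedback: a respondent answers a question we asked."""
    respondent_id: str
    question: str
    answer: str


@dataclass(frozen=True)
class SocialPost:
    """Unsolicited feedback: an author publishes a post; no question exists."""
    author: str
    source: str  # e.g. "forum", "blog", "review"
    text: str


# Both kinds of feedback can live on the same dashboard without
# pretending that a post is a "response".
Feedback = Union[SurveyResponse, SocialPost]


def is_solicited(item: Feedback) -> bool:
    """True only for feedback that was given in reply to a question."""
    return isinstance(item, SurveyResponse)


# Hypothetical records, for illustration only.
survey = SurveyResponse("r-001", "How satisfied were you with your visit?", "Very satisfied")
post = SocialPost("forum_user_42", "forum", "Queued for 40 minutes at the branch today.")

for item in (survey, post):
    label = "solicited" if is_solicited(item) else "unsolicited"
    print(f"{type(item).__name__}: {label}")
```

Keeping the two record types separate means the dashboard can still report them side by side, while analysts never have to guess whether a "response" had a question behind it.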

Data integration happens at multiple levels:

The feedback delivery mechanisms vary and it is best to use a combination of the following:

Everything described in this article boils down to one idea: delight is the customer experience that customers and service organisations alike are seeking. It is rather simple when you think about it: understanding what the customer wants, needs and likes is a precondition to delight, and without social intelligence an important piece, covering multiple experience touchpoints, is missing from the full picture. As always, please do reach out with your own feedback on X or by email.


This may not be a 100% original idea. Other people have thought of a version of it in the past, like the Russian news site City Reporter. The site brought positive news stories to the front of its pages and found any and all silver linings in negative stories - “No disruption on the roads despite snow,” for example.

Nevertheless, we posit that launching a news channel that only reports good news will have a positive impact on humanity. It's all in the execution. The same idea can be executed well or really badly; if City Reporter was a case of the latter, we should give the idea another chance.

Here is an open invitation to the powers that be in the news industry, the CNNs and the BBCs of this world, to consider a global initiative and launch a TV and/or online news channel that will only report the good news and ignore the bad. We are not suggesting spinning the bad news to make it sound good, just ignoring it. In this respect this may be an original idea after all.

The news industry is defined by the saying: If it bleeds it leads.

Here are some excerpts from a Guardian article by Steven Pinker for more context:

In a BBC article by Tom Stafford, an academic experiment is described around how people deal with negative vs positive news. This is an excerpt from the article:

“The researchers present their experiment as solid evidence of a so called "negativity bias", psychologists' term for our collective hunger to hear, and remember bad news.

It isn't just schadenfreude (from the German words Schaden = damage and Freude = joy, meaning pleasure derived from another person's misfortune; this bracket is not part of the excerpt), the theory goes, but that we've evolved to react quickly to potential threats. Bad news could be a signal that we need to change what we're doing to avoid danger.”

No one can say it better than Steven Pinker in his genius article on The Guardian:

“Make a list of all the worst things that are happening anywhere on the planet that week, and you have an impressive-sounding—but ultimately irrational—case that civilization has never faced greater peril.”

The subconscious stores everything even if we don’t know it.

According to 26 experts, our subconscious stores every event, occurrence, emotion or circumstance from before we were born (i.e. from the womb... nothing metaphysical). It also fails to distinguish between the real and the imagined. If we keep contaminating our subconscious with negativity, that content will influence our future decisions, whether it is real or the product of a movie. The subconscious records everything without judgement, and everything in it is part of who we are.

There are some people who avoid watching the news for this exact reason. What if we could give these people a news channel they can watch?

listening247 lives and breathes agile product development. In the world of agile a prototype is created first, to serve as a proof of concept. If the prospects seem good, then with multiple iterations it gets improved into an Alpha-, then Beta-version, and ultimately it is launched in production mode.

This is exactly what we suggest we do in this case as well. This article is almost like an open strawman proposal to all news media.

How about listening247 starts by doing what it does best: finding good news online? We can create a daily social media harvester of posts with positive sentiment, in a few different languages, using our proprietary Generative AI.

We will then implement an automated stage of curation based on topics and report them on a daily newsletter and micro-site in a number of fixed columns as well as top stories and features. Here are some assumptions on the columns and features:

Let’s first see the kind of content we will get from social media listening and whether we think it has potential as a Digital TV channel. Should that be the case then maybe we can go to a VC fund or a like-minded charity foundation with this business idea and give it a go. Please contact us on X or email me with your thoughts.


This is a short story about social intelligence (SI) and banks. The unique selling proposition of listening247, a social intelligence solution, is high multilingual accuracy for sentiment, topics and brands; unfortunately this is also one of the solution’s biggest obstacles to scale. This trade-off between accuracy and scale was consciously made by a team of people - they were market researchers and they do have tremendous respect for data accuracy, sometimes to their detriment - until one day, not too long ago, they realised scalability does not have to be a trade-off.

Normally it took 3 weeks to create new custom machine learning models every time they came across new categories and languages. The operative word in the previous sentence is new. That was their little secret for reaching higher brand, sentiment and topic accuracy than their competitors. They realised that once the custom A.I. set-up for a product category and language was done, they would be on the same footing as every other social media monitoring tool on scalability, but with much higher accuracy. That's when they decided to pick one industry vertical, create the necessary set-up and run with it.

The decision was not easy because there were too many variables. They created a strawman proposal and put the question to the whole company and its advisors; after a couple of weeks and a lot of back and forth they picked the banking sector. There are many good reasons why this vertical deserves focus. They could have taken an FMCG product category or retail, healthcare, automotive or telecoms, but they chose to enlighten the banks first, before they tackled the rest of the world (in their own words). Here are some of the reasons that influenced their decision:

They had to start somewhere, so with help from a high-profile advisor from the industry they picked 11 major banks, mostly multinationals, to use as keywords for post harvesting. Here is the rest of the scope:

Language: English

Geography: Global

Period: Past 12 months

Sources: Twitter, blogs, forums, news, reviews, videos

Machine Learning Annotations: Sentiment, Topics, Brands, Noise (irrelevant posts which contain homonyms)

Deliverables: annotated data in CSV and Excel, drill-down and query dashboards, PowerPoint presentation

For their R&D project with the University on the impact of ESG on bank performance, they also retrieved the daily valuations of each of the 11 banks from Yahoo/Google Finance.

They harvested 4.5 million posts for the 11 banks in English globally. The pie chart below shows the share of each source type. Twitter was by far the biggest source of posts, followed by News, which is the only non-consumer source: mostly editorials published by journalists or by the banks themselves.

For DB, HSBC, BNP Paribas, Santander and Credit Agricole, Twitter was the biggest source of posts. Consumers do talk a lot about their banks, especially when they have complaints. On the other hand, for Barclays, SocGen, Unicredit and Intesa Sanpaolo, News was the biggest source, which suggests that either their customers do not have complaints or these banks do not focus on engaging with them on social media.

The findings were presented for the first time to a group of board directors of banks from various countries who were taking part in the International Directors Banking Programme (IDBP) at INSEAD.

Here are some of the highlights of the report:

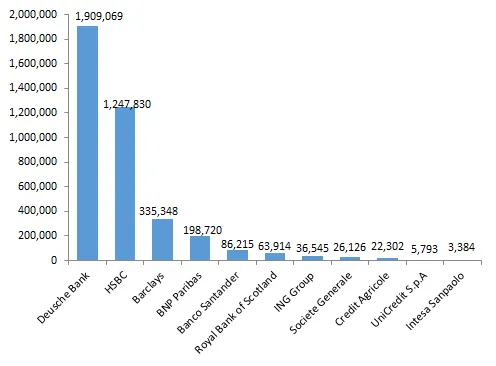

1. Deutsche Bank is ranked first in terms of Buzz (= total volume of posts), with 1.9 million posts from all sources. This represents a 42% share of voice for DB, which is followed by HSBC and Barclays, as you can see in the bar chart below.

2. The net sentiment score (NSS) was calculated for each bank and was used to rank them in the chart below. This is a trademarked score of DigitalMR and it combines all the positive, negative and neutral posts. RBS has the lowest score with -3%, whilst HSBC leads the pack with +9%. Compared with other verticals or product categories, the top NSS of 9% recorded here is quite low.

3. When it comes to topics of conversations online, financial events scored -8%. ESG scored +5% with the top topic being emotional connection. ESG seems to be a very hot topic around banks and other corporates.

4. The report can be quite granular in terms of topics and time periods. The table below shows a drill down into ESG by brand and quarter for net sentiment score. The colour coding makes it easy to pinpoint the problem areas. Deutsche Bank and RBS are the ones with the most quarters showing a negative NSS.
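The two headline metrics in the highlights above come down to simple arithmetic, sketched here in Python. `share_of_voice` follows directly from the figures quoted in the report. The `net_sentiment_score` formula (positive minus negative posts, divided by all posts) is an assumption made for illustration, since the exact definition of the trademarked score is not given in this article, and the sentiment counts are hypothetical.

```python
def share_of_voice(brand_posts: int, total_posts: int) -> float:
    """Percentage of all harvested posts that mention the brand."""
    if total_posts <= 0:
        raise ValueError("total_posts must be positive")
    return 100.0 * brand_posts / total_posts


def net_sentiment_score(positive: int, negative: int, neutral: int) -> float:
    """ASSUMED formula: (positive - negative) / all posts, as a percentage.

    Neutral posts enter only through the denominator, so a brand with
    many neutral posts is pulled towards zero.
    """
    total = positive + negative + neutral
    if total == 0:
        raise ValueError("no posts to score")
    return 100.0 * (positive - negative) / total


# Figures from the report: 1.9 million Deutsche Bank posts out of 4.5 million.
db_sov = share_of_voice(1_900_000, 4_500_000)
print(f"Deutsche Bank share of voice: {db_sov:.0f}%")

# Hypothetical counts, for illustration only (not taken from the report).
example_nss = net_sentiment_score(positive=250, negative=200, neutral=550)
print(f"Example NSS: {example_nss:+.0f}%")
```

Under this assumed definition a score can range from -100% (every post negative) to +100% (every post positive), which is why single-digit scores read as lukewarm.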

It looked as if the board-level executives had never seen anything similar before. They viewed the results with some scepticism and asked quite a few questions. Some of them, especially those who were with banks included in the project, wanted to drill down and understand more. The question is: will they manage to get the management of their banks to integrate social intelligence with the other streams of data they have?

What makes this report credible is that we know its sentiment and topic accuracy is over 75%. This is not just a number thrown out there; it can be verified by anyone. You can extract a random sample of 100 posts, read through them, and count how many of the brand, sentiment and topic annotations you agree with. By the time we publish the next short story on the banking report, the machine learning models will have improved to accuracies over 80%.
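The spot check described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not listening247's tooling: the record layout (`id`, `sentiment`, `topic`), the function names and the tiny data set are all hypothetical.

```python
import random


def draw_sample(posts, size=100, seed=None):
    """Draw a random sample of annotated posts for a human to review."""
    rng = random.Random(seed)
    return rng.sample(posts, min(size, len(posts)))


def agreement_rate(sample, human_labels, field):
    """Share of sampled posts where the reviewer's label matches the machine's."""
    if not sample:
        raise ValueError("sample is empty")
    agreed = sum(
        1 for post in sample if human_labels[post["id"]][field] == post[field]
    )
    return agreed / len(sample)


# Hypothetical machine annotations (a real check would use 100 posts).
posts = [
    {"id": 1, "sentiment": "negative", "topic": "fees"},
    {"id": 2, "sentiment": "positive", "topic": "service"},
    {"id": 3, "sentiment": "neutral", "topic": "governance"},
    {"id": 4, "sentiment": "positive", "topic": "fees"},
]

# Hypothetical labels from a human reviewer who read the same posts.
human_labels = {
    1: {"sentiment": "negative", "topic": "fees"},
    2: {"sentiment": "positive", "topic": "service"},
    3: {"sentiment": "negative", "topic": "governance"},
    4: {"sentiment": "positive", "topic": "fees"},
}

sample = draw_sample(posts, size=100, seed=0)
sentiment_accuracy = agreement_rate(sample, human_labels, "sentiment")
topic_accuracy = agreement_rate(sample, human_labels, "topic")
print(f"Sentiment agreement: {sentiment_accuracy:.0%}")
print(f"Topic agreement: {topic_accuracy:.0%}")
```

With a sample of 100 posts, each disagreement moves the result by one percentage point, so the estimate is easy to read but still carries sampling error; a larger sample narrows it.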

In the next article you can expect to find out how news about governance impacts the valuation of the banks. If you are wondering what other ways there are to create value for your bank from a social intelligence report like this, stay tuned; if you can't wait two weeks, reach out to me via X or email. Talk soon!


Nope, not in this case!

Statements such as ‘XYZ ranks first on social media buzz’ can be quite misleading. In Social Intelligence, understanding the sentiment and topics expressed in posts about a brand or company is just as important as counting the number of posts (i.e. buzz).

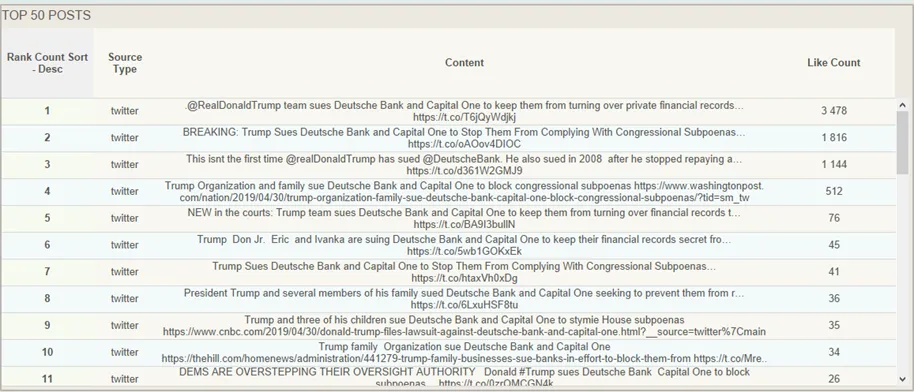

Deutsche Bank does indeed rank first among the 11 global banks included in the first listening247 banking report, launched in April this year. However, many of these posts are negative and could in fact harm Deutsche Bank in the real world, in terms of valuation and bottom-line impact.

In social listening & analytics, the starting date and the time period to be analysed are not restricted by the date one decides to carry out the project, as they would be in traditional market research (e.g. customer surveys), because we have the ability to harvest and analyse posts from the past. In this first report listening247 analysed English posts about 11 banks, found on X, YouTube, News, Forums, Blogs and Reviews, during the 12 months from May 2018 to April 2019 inclusive.

As you can see below, Deutsche Bank, with its 1.9 million posts across all sources, commands an impressive 42% share of voice among the banks.